library(sna)

library(xUCINET)

library(igraph)

library(FactoMineR)

library(ergm)EM 1422, Ca’ Foscari University of Venice

DISCLAIMER:

This are personal notes taken while following the lesson of the course in Network Analytics, EM 1422, at Ca’ Foscari University of Venice. Any mistake made is mine: as you might expect, student notes are not peer reviewed and/or endorsed as official materials. I do not own any rights on this material. Feel free to write me at solar-san@pm.me if you spot mistakes or have any suggestions. The source code is freely available at GitHub.com. Please share, comment, and leave a star if you use these notes!

- Most of the

Rcode is taken from the required textbook, Analyzing Social Networks usingR. The companion website contains useful resources and all code can be retrieved from the companion website. - The pictures and tables in the Centrality and Dyadic sections are taken from prof. Giorgia Minello materials for a course about networks.

A special thanks to prof. Righi, for this awesome course and for allowing me to make this material freely available online.

The final project for this course is available at this link.

Intro: Network Theory

First and foremost: network theory is a interdisciplinary science that can be applied in a wide variety of contexts and disciplines, such as archaeology, engineering, social sciences, biology. Networks have properties encoded in their structure that limit or enhance their behaviour.

Measure: it regards information and how it moves across a network. Everything interesting about networks consists in the flow of information: it is therefore of primary interest for social scientists.

For sufficiently small networks, plotting is an essential first step in its analysis; for large networks, only statistical representations are possible.

The point of arrival is to be able to compute p-values (null networks) and meaningful regressions (econometric of networks) on networks.

What is a network?

It is an abstract representation of interactions between discrete (separated from each others) entities. It is then a model: a simplification of reality.

These entities are represented as nodes: we abstract from any not important characteristics of these entities and conceptualize them as points. These nodes can be connected; such connections are conceptualized by ties or edges. These can be of many different classes or types (e.g.: reciprocated or not).

Ties and nodes can have a set of attributes; they do not concern the network itself, but are nonetheless characteristics of them. They can be anyhow interesting, even as influences and determinants of the strength, presence or other features of network relations and nodes behaviour.

We are modelling complex systems: we first need to know how their components interact with each other. A network is a catalogue of a system components (nodes, vertices) and the interaction between them (link, edges). The two main features in such a model are attributes and networks. These characteristics are intertwined in complex way. How much of the behaviour causes the network and how much of the behaviour is caused by the network?

In other words, is it the network itself that causes a behaviour, or is the behaviour of the individuals that creates the network?

Networks and complex systems

What is a complex system? It is a system made of many heterogeneous interacting components.

Networks are a very efficient way to model such a system; however, heterogeneity might pose challenges while modelling using networks. Networks are important when the connection between their discrete elements is not trivial and cannot be expressed by using simple patterns or other mathematical models: complex networks have emerging behaviour, which cannot be expressed as a simple aggregate/pattern between its components.

These complex interconnections lead to fragility: a single point of failure might impact the whole network in unexpected ways, causing a chain of events affecting every interconnected node. There is a fallout effect that can propagate in unexpected ways, which can have serious consequences.

Fragility: small events that seem to impact a small subset of the network can have profound consequences on the whole system, reverberating and cascading through the connections between nodes.

Failures and behaviour can be modelled by a set of rules, with the aim to predict and forecast. Fragility is a strong issue: if not taken in account, the models are completely useless for predicting and understanding a complex behaviour. In a network, each stress point might reverberate throughout the whole system, causing system-wide breakdowns.

Economic thought is built, classically, on two concepts:

- Centralized markets.

- Games among few people with fixed rules, structures and identities.

However, there are many economic interactions where the social context is not a second-order consideration, but is actually a primary driver of behaviours and outcomes, such as trading and exchanging goods and services. Interactions are rarely anonymous, who you interact with is relevant for behaviour and outcomes: e.g., the position of a person in a social network matters for micro outcomes; frequently, the topology of interactions determines the macro outcomes of the system.

Mapping nodes is a fundamental tool to understand and model them: by mapping nodes and connections, weighting the flow of information between them, it is possible to see that most of the times there are patterns emerging. In other words, networks are mostly not random.

There are many types of networks/graphs depending on their edges:

- undirected graph: represents symmetric relationships. The existence of a relationship between A and B implies a relationship between B and A.

- directed graph: represents asymmetric relationships (e.g.: paper A cites B; the inverse is usually not true).

A network is called directed (or digraph) if all of its links are directed.; it is called undirected if all of its links are undirected. Some networks can have simultaneously both types of links.

non-weighted graph: where edges are weighted equally.

weighted graph: where edges are weighted differently (e.g.: trade networks).

signed networks: where edges can have positive or negative valence.

multi-layer networks: in which the same entities can have multiple types of relationships (e.g.: family and friends).

hyper-networks: networks in which edges connect more than two vertices.

This course mainly studies undirected non-weighted single-layer graphs/networks.

Graph & Networks

- {Network, node, link}: refers to a real system.

- {Graph, vertex, edge}: mathematical representation of a network.

Basic networks parameters are:

- The number of nodes, or . Represents the number of components of the system. It is often called the size of the network.

- To distinguish them, nodes are labelled: .

- A key characteristic of each node is its degree, representing the number of links it has to other nodes.

- The number of links, or , represents the number of interactions between the nodes.

- They are rarely labelled, as they can be identified through the nodes they connect.

- In an undirected network, the total number of links can be expressed as the sum of the node degrees: The corrects for the fact that in the sum each link is counted twice.

- In a directed network, instead:

The average degree for an undirected network is;

The average degree for a directed network is:

In directed networks it is important to distinguish between:

- incoming degree, : number of nodes that point to node .

- outgoing degree, : number of nodes that point from node to other nodes.

- node total degree, :

Graphs: graphs are a mathematical concept defined as a set of:

- set of vertices (V).

- set of edges (E) between vertices.

It is then a set composed of two subsets. It is a mathematical definition: graphs is the specialistic term, while network is used more generally in other disciplines. They can also include:

- attributes of vertices and edges.

The degree distribution provides the probability that a randomly selected node in the network has degree k. For a network with nodes, the degree distribution is the normalised histogram given by: where is the number of degree- nodes. The calculation of most network properties requires to know . The average degree of a network can be re-written as:

The functional form of determines many network phenomena.

Computational representations of a network

Simply drawing graphs does not allow to perform computations on them. We need a compact syntax that also allows to perform mathematics on them. The most common representations are:

- Adjacency matrix: a square matrix, where each element of the matrix indicates whether the pairs of vertices are adjacent in the network (e.g. directly connected).

- Adjacency list/Edge list: a list with two columns, one indicating the point of departure and one the point of arrival of a tie. A third column might be added to indicate the weight associated to each tie.

- Node list: for each note, the list of its connections is reported.

Adjacency matrix:

The adjacency matrix allows to keep track of its links by providing a complete list of them. The adjacency matrix for a network of nodes as rows and columns.

This kind of matrix can have only values 1 or 0 if we are dealing with an unweighed network; moreover, it is a symmetric matrix (links are reciprocated). In case of a undirected network, the matrix is asymmetric while edges can as well either have value 0 or 1 (or NA); the order is important: rows and columns are symmetric and must be ordered in the same way (e.g.: if row 3 contains data about node A its links, then column 3 must contain the same data and be about node A).

The degree of node can be obtained directly from the elements of the adjacency matrix. For undirected networks: It is then a sum over either the rows or the columns of the matrix.

Given that in an undirected network the number of outgoing links equal the number of incoming links, we have: In other words, the number of non-zero elements of the adjacency matrix is , or twice the number of links.

For directed networks, the sum over the adjacency matrix row and columns provide either the incoming or out coming degrees:

- Advantages:

- Systematic representation of all ties, in a compact form.

- Algebra can be applied.

- Disadvantages:

- If the network is sparse (few connections are active), a lot of space is occupied by useless zeros (representing no ties).

- No empty cells can be left in the matrix, otherwise programming languages might misinterpret data.

- We necessarily need square matrices.

- Need to check if:

- If the network is undirected: is the matrix symmetrical?

- Are self nominations possible? Otherwise, the diagonal must contains only 0s.

- Are labels used unique and avoid special characters?

Edge list

- Advantages:

- Saves computational resources.

- Easy to read through.

- Disadvantages:

- Matrix algebra cannot be applied directly.

- Need to check if:

- The datasets contains 2/3 lines.

- Isolates are excluded and will need to be added manually.

- Check that both direction of ties (in directed networks) are present in the list if relevant.

- Are names/labels used short and without special characters?

Node list:

- Advantages:

- Saves computational resources.

- Disadvantages:

- Matrix algebra cannot be applied directly.

- Sometimes slower for large networks.

- Requires more complex data structures (lists).

- Need to check if:

- Are isolates included with an empty line?

- Is the first column the name of the node or of the first connection?

- Did I include links in both the sender and the receiver list in undirected lists?

- Are names/labels used short and without special characters?

Subgraphs

Other meaningful definitions:

Network size: the number of nodes (statistical physics/theory of complex networks).

Density of a network: number of its edges divided by the total number of possible pair of nodes (which is the maximum number of links that could possibly exist in the network).

- A network is a subgraph of network if .

- A complete network is a network that contains all the possible links between its nodes and has therefore density .

- Clique: it is a complete subgraph in which all the possible ties are formed.

Networks in R

These are the packages required to work with networks in R:

To operate with adjacency matrix we need to convert each loaded files into an array. Before creating a project, it’s good practice to compute all the data manipulations necessary.

Friends <- read.csv(

paste0(

files_path,

"Friends.csv"

),

header = T,

sep = ";",

row.names = 1) |>

as.matrix()

Friends Ana Ella Isra Petr Sam

Ana 0 2 1 3 2

Ella 1 0 3 1 3

Isra 3 1 0 2 1

Petr 2 1 2 0 1

Sam 3 2 1 1 0To work with a network project, we use the command xCreateProject.

SmallProject <-

xCreateProject(GeneralDescription = "A small 5 people study", #description of the project

NetworkName = "Friends", #name of the network

References = "", #needed to put citations or references

NETFILE = Friends, #name of the data file

FileType = "Robject", #type of the file

InFormatType = "AdjMat", #format of the file. In this case, adjacency matrix

NetworkDescription="Friendship networks among 5 people with 3=Good friend, 2=Friend and 1=Acquaintance",

Mode = c("People"), #type of nodes; cells, persons, firms, ...

Directed = T,

Loops = F,

Values = "Ordinal",

Class = "matrix"

)

------ FUNCTION: xCreateProject ---------------------------

- Basic checks performed on the argument for "xCreateProject".

- Data imported: [ 0 1 3 2 3 2 0 1 1 2 1 3 0 2 1 3 1 2 0 1 2 3 1 1 0 ] and named as: [ Friends ]

---------------------------------------------------------------With network projects you need to have a complex data structure in which to store all the information needed to describe it. This becomes especially relevant when working with big projects.

To access the adjacency matrix:

SmallProject$Friends Ana Ella Isra Petr Sam

Ana 0 2 1 3 2

Ella 1 0 3 1 3

Isra 3 1 0 2 1

Petr 2 1 2 0 1

Sam 3 2 1 1 0To attach attributes to your network:

Attributes <- read.csv(

paste0(

files_path,

"Attributes.csv"

),

header = T,

sep = ";",

row.names = 1

)

SmallProject <-

xAddAttributesToProject(

ProjectName = SmallProject,

ATTFILE1 = Attributes,

FileType = "Robject",

Mode = c("People"),

AttributesDescription = c(

'Age in years', 'Gender (1 = Male, 2 = Female")', "Preferred Music"

)

)

------ FUNCTION: xAddAttributesToProject ---------------------------

Getting "dataproject" with name [SmallProject].

Attribute file [Attributes] imported**FUNDAMENTAL: network attributes might be stored in a

dataframe(adjacency matrices must be stored as arrays instead*).**

SmallProject$Attributes NodeName Age Gender Music

1 Ana 23 1 Reggae

2 Ella 67 1 Rock

3 Isra 45 1 Pop

4 Petr 28 2 Reggae

5 Sam 33 2 PopCalling the project object prints data frames with all the relevant informations:

SmallProject$ProjectInfo

$ProjectInfo$GeneralDescription

[1] "A small 5 people study"

$ProjectInfo$Modes

[1] "People"

$ProjectInfo$AttributesDescription

Variable Mode Details

1 NodeName People Names of the nodes for mode People

2 Age People Age in years

3 Gender People Gender (1 = Male, 2 = Female")

4 Music People Preferred Music

$ProjectInfo$NetworksDescription

NetworkName

1 Friends

Details

1 Friendship networks among 5 people with 3=Good friend, 2=Friend and 1=Acquaintance

$ProjectInfo$References

[1] ""

$Attributes

NodeName Age Gender Music

1 Ana 23 1 Reggae

2 Ella 67 1 Rock

3 Isra 45 1 Pop

4 Petr 28 2 Reggae

5 Sam 33 2 Pop

$NetworkInfo

NetworkName ModeSender ModeReceiver Directed Loops Values Class

1 Friends People People TRUE FALSE Ordinal matrix

$Friends

Ana Ella Isra Petr Sam

Ana 0 2 1 3 2

Ella 1 0 3 1 3

Isra 3 1 0 2 1

Petr 2 1 2 0 1

Sam 3 2 1 1 0We now want to add another network to an existing project:

Communication <- read.csv(

paste0(files_path, "communication.csv"),

header = T,

sep = ";",

row.names = 1

) |>

as.matrix()

SmallProject<-xAddToProject(ProjectName=SmallProject,

NetworkName="Communication",

NETFILE1=Communication,

FileType="Robject",

NetworkDescription="Communication network",

Mode=c("People"),

Directed=FALSE,

Loops=FALSE,

Values="Binary",

Class="matrix")

------ FUNCTION: xAddToProject ---------------------------

ProjectName: SmallProjectNumber of existing networks: 1

NetworkName: Communication

- Basic checks performed on the argument for "xAddToProject".

- Data imported: [ 0 1 0 1 1 1 0 0 1 0 0 0 0 1 0 1 1 1 0 1 1 0 0 1 0 ] and named: [ Communication ]

---------------------------------------------------------------Friends is a valued network, we can dichotomized it by using:

dichotomized_friend_network <- xDichotomize(

SmallProject$Friends,

Value=1,

Type="GT"

)

dichotomized_friend_network Ana Ella Isra Petr Sam

Ana 0 1 0 1 1

Ella 0 0 1 0 1

Isra 1 0 0 1 0

Petr 1 0 1 0 0

Sam 1 1 0 0 0The density is an important statistic to describe a network:

density_of_communication<-xDensity(dichotomized_friend_network)

. ------------------------------------------------------------------

. Number of valid cells: 20

. which corresponds to: 100 % of considered cells.

. ------------------------------------------------------------------ To reverse the direction of each tie of a network, you simply need to transpose the adjacency matrix.

IsFriendOf<-xTranspose(SmallProject$Friends)

IsFriendOf Ana Ella Isra Petr Sam

Ana 0 1 3 2 3

Ella 2 0 1 1 2

Isra 1 3 0 2 1

Petr 3 1 2 0 1

Sam 2 3 1 1 0For the following part, we switch to the BakerJournals network:

Baker_Journals$ProjectInfo

$ProjectInfo$ProjectName

[1] "Baker_Journals"

$ProjectInfo$GeneralDescription

[1] "Donald Baker collected data on the citations among 20 social work journals for 1985-1986. The data consist of the number of citations from articles in one journal to articles in another journal. Using hierarchical cluster analysis, he found that the network seems to have a core-periphery structure, and argues that American journals tend to be less likely to involve international literature."

$ProjectInfo$AttributesDescription

NULL

$ProjectInfo$NetworksDescription

[1] "Citations: Number of citations"

$ProjectInfo$References

[1] "Baker, D. R. (1992). A Structural Analysis of Social Work Journal Network: 1985-1986. Journal of Social Service Research, 15(3-4), 153-168."

$Attributes

NULL

$NetworkInfo

Name Mode Directed Diagonal Values Class

1 Citations A TRUE FALSE Ratio matrix

$CoCitations

AMH ASW BJSW CAN CCQ CSWJ CW CYSR FR IJSW JGSW JSP JSWE PW SCW SSR SW

AMH 13 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 3

ASW 0 70 0 0 0 0 0 0 0 0 0 0 18 13 0 21 73

BJSW 0 0 95 0 0 0 0 0 0 0 0 0 13 0 0 0 19

CAN 0 0 0 109 0 0 9 0 0 0 0 0 0 0 6 0 8

CCQ 0 0 0 0 92 0 12 0 0 0 0 0 0 0 3 0 0

CSWJ 0 0 0 0 0 40 0 0 0 0 0 0 0 0 41 20 45

CW 0 0 0 7 0 0 187 6 0 0 0 0 11 7 32 10 58

CYSR 0 0 0 12 5 0 70 26 4 0 0 0 0 6 8 14 28

FR 0 0 0 0 0 0 0 0 205 0 0 0 0 0 18 0 9

IJSW 0 0 0 0 0 0 0 0 0 6 0 0 0 0 0 0 3

JGSW 0 0 0 0 0 0 0 0 0 0 9 0 0 0 18 0 18

JSP 0 0 0 0 0 0 0 0 0 0 0 35 0 0 0 7 0

JSWE 0 9 0 0 0 0 0 0 0 0 0 0 104 0 18 16 58

PW 0 0 0 7 0 0 4 0 0 0 0 0 0 9 0 0 0

SCW 0 8 0 6 0 8 17 0 6 0 6 0 21 0 149 36 124

SSR 0 7 0 0 0 0 17 0 0 0 0 0 9 0 30 105 106

SW 0 15 0 0 0 0 52 0 9 0 0 0 33 19 58 63 366

SWG 0 0 2 0 0 0 0 0 0 0 0 0 9 0 9 7 40

SWHC 0 0 0 0 0 0 0 0 0 0 0 0 0 0 20 0 26

SWRA 0 0 0 0 0 0 8 0 0 0 0 0 24 0 8 39 44

SWG SWHC SWRA

AMH 0 0 0

ASW 0 0 7

BJSW 0 0 0

CAN 0 0 0

CCQ 0 0 0

CSWJ 0 0 0

CW 0 0 0

CYSR 0 0 6

FR 0 0 0

IJSW 0 0 0

JGSW 0 0 0

JSP 0 0 0

JSWE 0 7 16

PW 0 0 0

SCW 8 6 18

SSR 0 0 25

SW 15 43 8

SWG 41 9 0

SWHC 0 86 0

SWRA 0 0 40xSymmetrize allows for symmetrization of a network. In this case, we make the network symmetric by setting the value of ties respectively to Max and Min:

xSymmetrize(Baker_Journals$CoCitations, Type = "Max") AMH ASW BJSW CAN CCQ CSWJ CW CYSR FR IJSW JGSW JSP JSWE PW SCW SSR SW

AMH 13 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 3

ASW 0 70 0 0 0 0 0 0 0 0 0 0 18 13 8 21 73

BJSW 0 0 95 0 0 0 0 0 0 0 0 0 13 0 0 0 19

CAN 0 0 0 109 0 0 9 12 0 0 0 0 0 7 6 0 8

CCQ 0 0 0 0 92 0 12 5 0 0 0 0 0 0 3 0 0

CSWJ 0 0 0 0 0 40 0 0 0 0 0 0 0 0 41 20 45

CW 0 0 0 9 12 0 187 70 0 0 0 0 11 7 32 17 58

CYSR 0 0 0 12 5 0 70 26 4 0 0 0 0 6 8 14 28

FR 0 0 0 0 0 0 0 4 205 0 0 0 0 0 18 0 9

IJSW 0 0 0 0 0 0 0 0 0 6 0 0 0 0 0 0 3

JGSW 0 0 0 0 0 0 0 0 0 0 9 0 0 0 18 0 18

JSP 0 0 0 0 0 0 0 0 0 0 0 35 0 0 0 7 0

JSWE 0 18 13 0 0 0 11 0 0 0 0 0 104 0 21 16 58

PW 0 13 0 7 0 0 7 6 0 0 0 0 0 9 0 0 19

SCW 0 8 0 6 3 41 32 8 18 0 18 0 21 0 149 36 124

SSR 0 21 0 0 0 20 17 14 0 0 0 7 16 0 36 105 106

SW 3 73 19 8 0 45 58 28 9 3 18 0 58 19 124 106 366

SWG 0 0 2 0 0 0 0 0 0 0 0 0 9 0 9 7 40

SWHC 0 0 0 0 0 0 0 0 0 0 0 0 7 0 20 0 43

SWRA 0 7 0 0 0 0 8 6 0 0 0 0 24 0 18 39 44

SWG SWHC SWRA

AMH 0 0 0

ASW 0 0 7

BJSW 2 0 0

CAN 0 0 0

CCQ 0 0 0

CSWJ 0 0 0

CW 0 0 8

CYSR 0 0 6

FR 0 0 0

IJSW 0 0 0

JGSW 0 0 0

JSP 0 0 0

JSWE 9 7 24

PW 0 0 0

SCW 9 20 18

SSR 7 0 39

SW 40 43 44

SWG 41 9 0

SWHC 9 86 0

SWRA 0 0 40xSymmetrize(Baker_Journals$CoCitations, Type = "Min") AMH ASW BJSW CAN CCQ CSWJ CW CYSR FR IJSW JGSW JSP JSWE PW SCW SSR SW

AMH 13 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

ASW 0 70 0 0 0 0 0 0 0 0 0 0 9 0 0 7 15

BJSW 0 0 95 0 0 0 0 0 0 0 0 0 0 0 0 0 0

CAN 0 0 0 109 0 0 7 0 0 0 0 0 0 0 6 0 0

CCQ 0 0 0 0 92 0 0 0 0 0 0 0 0 0 0 0 0

CSWJ 0 0 0 0 0 40 0 0 0 0 0 0 0 0 8 0 0

CW 0 0 0 7 0 0 187 6 0 0 0 0 0 4 17 10 52

CYSR 0 0 0 0 0 0 6 26 0 0 0 0 0 0 0 0 0

FR 0 0 0 0 0 0 0 0 205 0 0 0 0 0 6 0 9

IJSW 0 0 0 0 0 0 0 0 0 6 0 0 0 0 0 0 0

JGSW 0 0 0 0 0 0 0 0 0 0 9 0 0 0 6 0 0

JSP 0 0 0 0 0 0 0 0 0 0 0 35 0 0 0 0 0

JSWE 0 9 0 0 0 0 0 0 0 0 0 0 104 0 18 9 33

PW 0 0 0 0 0 0 4 0 0 0 0 0 0 9 0 0 0

SCW 0 0 0 6 0 8 17 0 6 0 6 0 18 0 149 30 58

SSR 0 7 0 0 0 0 10 0 0 0 0 0 9 0 30 105 63

SW 0 15 0 0 0 0 52 0 9 0 0 0 33 0 58 63 366

SWG 0 0 0 0 0 0 0 0 0 0 0 0 0 0 8 0 15

SWHC 0 0 0 0 0 0 0 0 0 0 0 0 0 0 6 0 26

SWRA 0 0 0 0 0 0 0 0 0 0 0 0 16 0 8 25 8

SWG SWHC SWRA

AMH 0 0 0

ASW 0 0 0

BJSW 0 0 0

CAN 0 0 0

CCQ 0 0 0

CSWJ 0 0 0

CW 0 0 0

CYSR 0 0 0

FR 0 0 0

IJSW 0 0 0

JGSW 0 0 0

JSP 0 0 0

JSWE 0 0 16

PW 0 0 0

SCW 8 6 8

SSR 0 0 25

SW 15 26 8

SWG 41 0 0

SWHC 0 86 0

SWRA 0 0 40To analyse and compare networks a correlation measure is employed. It is necessary to transform then into vectors first:

cor(c(SmallProject$Friends),c(SmallProject$Communication))[1] 0.29116However, in this case, the diagonal is all composed of 0s, which is influencing the computations. This is caused by the fact that in this network people cannot be in a relationship with themselves; then, it is necessary to transform them into NA.

T1 <- SmallProject$Friends

diag(T1) <- NA

T2 <- SmallProject$Communication

diag(T2) <- NA

cor(c(T1),c(T2),use="complete.obs")[1] -0.07537784Another interesting computation is to take attributes of a network nodes and transform then into another separated network: in this example, we are playing with the age attributes and we are interested in its absolute difference among individuals.

agediff <- xAttributeToNetwork(SmallProject$Attributes[,2], Type = "AbsDiff")*1

xNormalize(agediff, Type = "Max") [,1] [,2] [,3] [,4] [,5]

[1,] 0.0000000 1 0.5000000 0.1136364 0.2272727

[2,] 1.0000000 0 0.5000000 0.8863636 0.7727273

[3,] 1.0000000 1 0.0000000 0.7727273 0.5454545

[4,] 0.1282051 1 0.4358974 0.0000000 0.1282051

[5,] 0.2941176 1 0.3529412 0.1470588 0.0000000The xNormalize network can help with dichotomize, because it allows you to spot the natural cut-off point in your data.

The shortest path among nodes (geodesic distance) can be computed with:

xGeodesicDistance(SmallProject$Communication) Ana Ella Isra Petr Sam

Ana 0 1 2 1 1

Ella 1 0 2 1 2

Isra 2 2 0 1 2

Petr 1 1 1 0 1

Sam 1 2 2 1 0Each matrix element represent the distance among the network nodes. In this networks, for example, you need to cross two different ties to move from Ella to Isra.

To store your project for later use:

save(SmallProject, file = paste0(files_path, "ClassProject.RData"))To work with the sna library, you can convert your project by calling the as.network function:

snafile.n <- as.network(SmallProject$Friends, directed = TRUE)

snafile.n Network attributes:

vertices = 5

directed = TRUE

hyper = FALSE

loops = FALSE

multiple = FALSE

bipartite = FALSE

total edges= 20

missing edges= 0

non-missing edges= 20

Vertex attribute names:

vertex.names

No edge attributesWith igraph, instead, call graph_from_adjacency_matrix:

igraph_file.i <- graph_from_adjacency_matrix(SmallProject$Friends,

mode = c("directed"), diag = FALSE)

igraph_file.iIGRAPH 0f0edc5 DN-- 5 36 --

+ attr: name (v/c)

+ edges from 0f0edc5 (vertex names):

[1] Ana ->Ella Ana ->Ella Ana ->Isra Ana ->Petr Ana ->Petr Ana ->Petr

[7] Ana ->Sam Ana ->Sam Ella->Ana Ella->Isra Ella->Isra Ella->Isra

[13] Ella->Petr Ella->Sam Ella->Sam Ella->Sam Isra->Ana Isra->Ana

[19] Isra->Ana Isra->Ella Isra->Petr Isra->Petr Isra->Sam Petr->Ana

[25] Petr->Ana Petr->Ella Petr->Isra Petr->Isra Petr->Sam Sam ->Ana

[31] Sam ->Ana Sam ->Ana Sam ->Ella Sam ->Ella Sam ->Isra Sam ->PetrHow to work with edge lists

xUCINET can import only adjacency matrices; a workaround could the following:

hp5edges <- read.table(

paste0(

files_path, 'Friends_edgelist.csv'

),

sep = ';',

header = F

)

hp5edges |> head() V1 V2 V3

1 Ana Ella 2

2 Ana Isra 1

3 Ana Petr 3

4 Ana Sam 2

5 Ella Ana 1

6 Ella Isra 3After some data preparation, the code is quite straightforward:

colnames(hp5edges) <- c("from","to","w")

hp5edgesmat <- as.matrix(hp5edges) #igraph wants our data in matrix format

hp5net <- graph_from_edgelist(hp5edgesmat[,1:2], directed=TRUE)

MyAdj <- as_adjacency_matrix(

hp5net,

type = c("both"),

attr = NULL,

names = TRUE,

sparse = FALSE

)One of the most used tools to visualize network is called Pajek, which needs the igraph library to work. It is essentially a list of nodes and edges.

yeast_igraph <- read_graph(

paste0(

files_path, 'YeastS.net'

),

format = c("pajek")

) |>

as_adjacency_matrix(type= 'both', attr = NULL, names = T, sparse = F)A classic visualization of a complex network is:

par(mar= c(0, 0, 0, 0))

gplot(yeast_igraph, gmode = 'graph')

Data visualization: how to study networks

After importing data in R to build a project, we can start to study it: there are two different approaches.

- Data visualization: observing a network and organizing it can lead to insights about its dynamic and the underlying complex system.

- It often works properly only for small networks, with 10-100 nodes and a few edges.

- Statistical properties: they characterize the structure of the network and are useful to abstract from the present cases to create models of networks structures and compare different networks.

- It is the best method to deal with large networks.

In visualizing a network the position in which we place the nodes relative to each other is important to obtain clarity. There are many options: these methods are useful to associate position and information.

- Create a circle of equally separated nodes. This is usually the first approach.

- Use a categorical attribute to plot clusters similar nodes.

- Use a continuous attribute to assign them a coordinate in the visualization space.

- Optimizing node locations according to some algorithms; in this case, the aim is to enhance visualization and avoid overlapping.

When using a weighted network, dichotomization is essential to achieve a meaningful visualization. Remember that setting the dichotomization level is a fundamental aspect of studying a network and the optimal level depends on the task at hand.

Optimization algorithms

The two most used algorithm, used when the position of the nodes is not relevant to the analysis and we want to have a clear representation of a network are:

- Fruchterman and Reingold (1991)

It considers the network as a collection of magnets and springs. All the springs exert the same force and all the magnets have the same polarity: the former tend to push each other apart, while the springs keep them together.

The system starts from a random position and you simply wait for the system to reach a level of minimal entropy, or a state of equilibrium. An iterative process is used to determine that state.

The resulting network has ties which have a similar (or equal) length and unconnected nodes are positioned far from each others.

- Kamada Kaway (1989)

Only springs forces are present; geodesic distance is computed and is used to determine the power of the attraction between two pair of nodes.

Again, the system is in equilibrium as it reaches its minimum energy level. The resulting network has densely connected set of nodes placed close to each other.

With either algorithm there is no unique optimal solution, starting from random positions: this means that there might be multiple local minima, some of them undesirable.

Plotting graphs in R

Some information about the dataset (already loaded with xUCINET):

Krackhardt_HighTech$ProjectInfo$ProjectName

[1] "Krackhardt_HighTech."

$GeneralDescription

[1] "These are data collected by David Krackhardt among the managers of a high-tech company. The company manufactured high-tech equipment on the West Coast of the United States and had just over 100 employees with 21 managers. Contains three types of network relations and four attributes."

$AttributesDescription

[1] "Age: the managers age (in years)"

[2] "Tenure: the length of service or tenure (in years)"

[3] "Level: the level in the corporate hierarchy (coded 1=CEO, 2=Vice President, 3=Manager)"

[4] "Department: a nominal coding for the department (coded 1, 2, 3, 4, with the CEO in department 0, i.e., not in a department)"

$NetworksDescription

[1] "Advice: who the manager went to for advice"

[2] "Friendship: who the manager considers a friend"

[3] "ReportTo: whom the manager reported to, taken from company documents"

$References

[1] "Krackhardt D. (1987). Cognitive social structures. Social Networks, 9, 104-134."

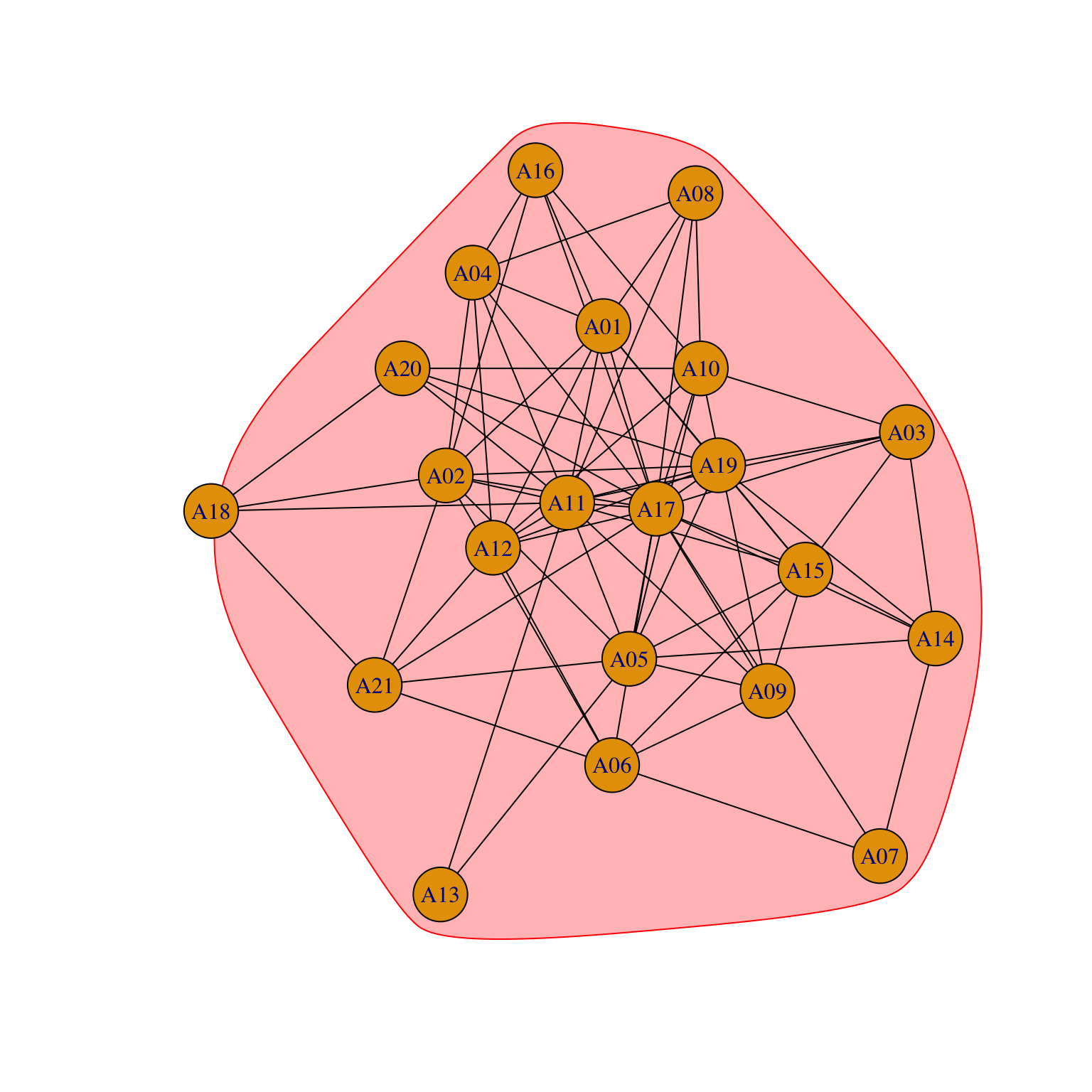

[2] "Wasserman S and K Faust (1994). Social Network Analysis: Methods and Applications. Cambridge University Press, Cambridge."First, we want to look at the friendship network:

Krackhardt_HighTech$Friendship A01 A02 A03 A04 A05 A06 A07 A08 A09 A10 A11 A12 A13 A14 A15 A16 A17 A18 A19

A01 0 1 0 1 0 0 0 1 0 0 0 1 0 0 0 1 0 0 0

A02 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0

A03 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 1

A04 1 1 0 0 0 0 0 1 0 0 0 1 0 0 0 1 1 0 0

A05 0 1 0 0 0 0 0 0 1 0 1 0 0 1 0 0 1 0 1

A06 0 1 0 0 0 0 1 0 1 0 0 1 0 0 0 0 1 0 0

A07 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

A08 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

A09 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

A10 0 0 1 0 1 0 0 1 1 0 0 1 0 0 0 1 0 0 0

A11 1 1 1 1 1 0 0 1 1 0 0 1 1 0 1 0 1 1 1

A12 1 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0

A13 0 0 0 0 1 0 0 0 0 0 1 0 0 0 0 0 0 0 0

A14 0 0 0 0 0 0 1 0 0 0 0 0 0 0 1 0 0 0 0

A15 1 0 1 0 1 1 0 0 1 0 1 0 0 1 0 0 0 0 1

A16 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

A17 1 1 1 1 1 1 1 1 1 1 1 1 0 1 1 1 0 0 1

A18 0 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

A19 1 1 1 0 1 0 0 0 0 0 1 1 0 1 1 0 0 0 0

A20 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 1 0

A21 0 1 0 0 0 0 0 0 0 0 0 1 0 0 0 0 1 1 0

A20 A21

A01 0 0

A02 0 1

A03 0 0

A04 0 0

A05 0 1

A06 0 1

A07 0 0

A08 0 0

A09 0 0

A10 1 0

A11 0 0

A12 0 1

A13 0 0

A14 0 0

A15 0 0

A16 0 0

A17 1 1

A18 0 0

A19 1 0

A20 0 0

A21 0 0We first want to symmetrize this network:

#method 1

Krackhardt_HighTech$Friendship_SymMin<-(Krackhardt_HighTech$Friendship)*t(Krackhardt_HighTech$Friendship)

#method 2

Krackhardt_HighTech$Friendship_SymMin2<-xSymmetrize(Krackhardt_HighTech$Friendship,Type="Min")Are they the same?

sum(Krackhardt_HighTech$Friendship_SymMin - Krackhardt_HighTech$Friendship_SymMin2)[1] 0Yep.

Let’s look at all the objects contained in the network data:

names(Krackhardt_HighTech)[1] "ProjectInfo" "Attributes" "NetworkInfo"

[4] "Advice" "Friendship" "ReportTo"

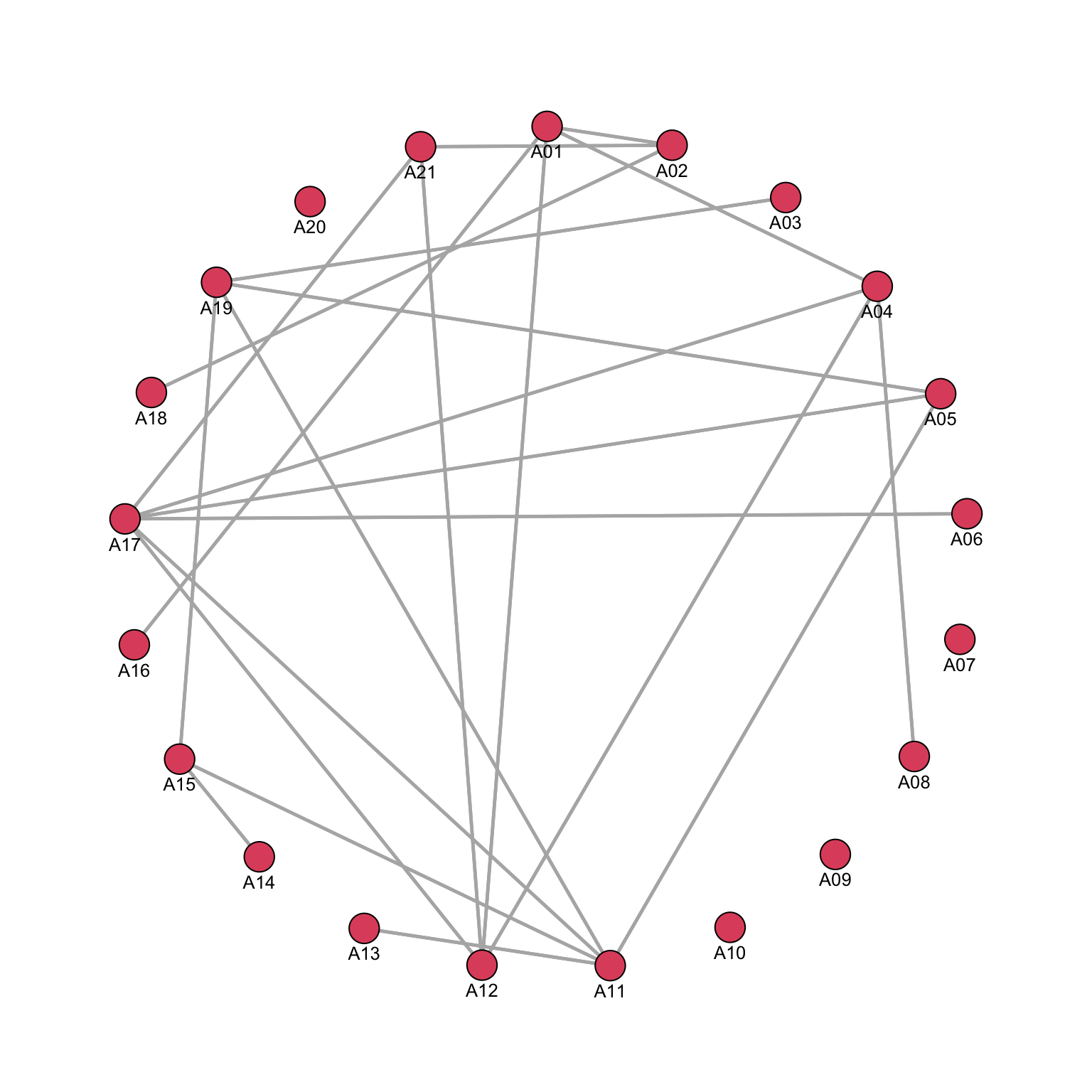

[7] "Friendship_SymMin" "Friendship_SymMin2"We can use the gplot() function in sna to visualize the network, where we specify how the points/nodes should be positioned in the 2-dimensional space. Here we position them in a circle (mode=“circle”):

par(mar = c(0, 0, 0, 0))

gplot(Krackhardt_HighTech$Friendship_SymMin, mode="circle")

We can immediately see that event though we symmetrized the network, we have a representation of a directed network, even though by construction we do not have built one. We need to specify that we are not working with a directed network, which is the default option:

par(mar = c(0, 0, 0, 0))

gplot(Krackhardt_HighTech$Friendship_SymMin, mode="circle", gmode="graph")

gmode helps in solving the issue: the options are + graph: undirected, + digraph (default): directed, + twomode: twomode.

To reduce the dimension of the nodes, we can use the argument vertex.cex:

gplot(Krackhardt_HighTech$Friendship_SymMin, mode="circle", gmode="graph",vertex.cex=.8)

To enhance the visualization, with such a small network, labels can be added to nodes.

par(mar = c(0, 0, 0, 0))

gplot(Krackhardt_HighTech$Friendship_SymMin,

gmode="graph", # type of network: undirected

mode="circle", # how the nodes are positioned

vertex.cex=.8, # the size of the node shapes

displaylabels=TRUE, # to add the node labels

label.pos=1, # to position the labels below the node shapes

label.cex=.8, # to decrease the size of the node labels

edge.col="grey70") # to make the colour of the ties/edges 30% black and 70% white

We can already see that while some nodes are particularly connected, some are not: we can also check whether there are some signs of error in our code or in the data, which might require correction of further cleaning.

In this case, while putting nodes in a circle works, we can start to look at different types of visualization.

We will use another database:

par(mar = c(0, 0, 0, 0))

Wolfe_Primates$ProjectInfo$ProjectName

[1] "Wolfe_Primates."

$GeneralDescription

[1] "These data represent 3 months of interactions among a troop of monkeys, observed in the wild by Linda Wolfe as they sported by a river in Ocala, Florida."

$AttributesDescription

[1] "Gender: 1=male, 2=female"

[2] "Age: age in years"

[3] "Rank: rank in the troop, with 1 being highest"

$NetworksDescription

[1] "JointPresence: Joint presence at the river was coded as an interaction and these were summed within all pairs"

[2] "Kinship: Indicates the putative kin relationships among the animals: 18 may be the granddaughter of 19"

$References

NULLThis dataset is useful because we have age and rank which are attributes that might be clearly used to produce an informative visualization.

Wolfe_Primates$Attributes Gender Age Rank

A01 1 15 1

A02 1 10 2

A03 1 10 3

A04 1 8 4

A05 1 7 5

A06 2 15 10

A07 2 5 11

A08 2 11 6

A09 2 8 12

A10 2 9 9

A11 2 16 7

A12 2 10 8

A13 2 14 18

A14 2 5 19

A15 2 7 20

A16 2 11 13

A17 2 7 14

A18 2 5 15

A19 2 15 16

A20 2 4 17Ideally we would like highly ranked individuals on the top of the figure, and then in decreasing order. By default however a rank assign low values to top positions. As a solution, we can reverse the order:

Wolfe_Primates$Attributes<-cbind(Wolfe_Primates$Attributes,Rank_Rev=max(Wolfe_Primates$Attributes[,3])+1-Wolfe_Primates$Attributes$Rank)

Wolfe_Primates$Attributes Gender Age Rank Rank_Rev

A01 1 15 1 20

A02 1 10 2 19

A03 1 10 3 18

A04 1 8 4 17

A05 1 7 5 16

A06 2 15 10 11

A07 2 5 11 10

A08 2 11 6 15

A09 2 8 12 9

A10 2 9 9 12

A11 2 16 7 14

A12 2 10 8 13

A13 2 14 18 3

A14 2 5 19 2

A15 2 7 20 1

A16 2 11 13 8

A17 2 7 14 7

A18 2 5 15 6

A19 2 15 16 5

A20 2 4 17 4The network data are valued and represent how often two monkeys are spotted together:

Wolfe_Primates$JointPresence A01 A02 A03 A04 A05 A06 A07 A08 A09 A10 A11 A12 A13 A14 A15 A16 A17 A18 A19

A01 0 2 10 4 5 5 9 7 4 3 3 7 3 2 5 1 4 1 0

A02 2 0 5 1 3 1 4 2 6 2 5 4 3 2 2 6 3 1 1

A03 10 5 0 8 9 5 11 7 8 8 14 17 9 11 11 5 9 4 6

A04 4 1 8 0 4 0 3 4 2 3 5 3 11 4 7 0 4 3 3

A05 5 3 9 4 0 3 5 7 4 3 5 6 3 4 4 1 2 1 3

A06 5 1 5 0 3 0 5 2 3 2 2 4 4 3 1 1 2 0 1

A07 9 4 11 3 5 5 0 5 4 6 3 9 5 5 4 2 6 3 2

A08 7 2 7 4 7 2 5 0 3 0 3 4 2 1 3 0 1 1 1

A09 4 6 8 2 4 3 4 3 0 1 3 2 4 5 4 3 4 1 3

A10 3 2 8 3 3 2 6 0 1 0 4 7 5 5 7 2 2 3 3

A11 3 5 14 5 5 2 3 3 3 4 0 9 3 4 4 2 4 2 3

A12 7 4 17 3 6 4 9 4 2 7 9 0 7 7 8 3 7 2 4

A13 3 3 9 11 3 4 5 2 4 5 3 7 0 8 11 3 8 2 5

A14 2 2 11 4 4 3 5 1 5 5 4 7 8 0 8 1 5 4 4

A15 5 2 11 7 4 1 4 3 4 7 4 8 11 8 0 2 5 2 2

A16 1 6 5 0 1 1 2 0 3 2 2 3 3 1 2 0 6 1 0

A17 4 3 9 4 2 2 6 1 4 2 4 7 8 5 5 6 0 4 3

A18 1 1 4 3 1 0 3 1 1 3 2 2 2 4 2 1 4 0 2

A19 0 1 6 3 3 1 2 1 3 3 3 4 5 4 2 0 3 2 0

A20 1 1 5 0 3 2 2 0 2 2 1 3 3 1 1 1 3 1 6

A20

A01 1

A02 1

A03 5

A04 0

A05 3

A06 2

A07 2

A08 0

A09 2

A10 2

A11 1

A12 3

A13 3

A14 1

A15 1

A16 1

A17 3

A18 1

A19 6

A20 0This is clearly an undirected network.

If we try to plot it without dichotomizing:

gplot(Wolfe_Primates$JointPresence)

We obtain a very dense network and, without specifying a position for the nodes, they are put at random.

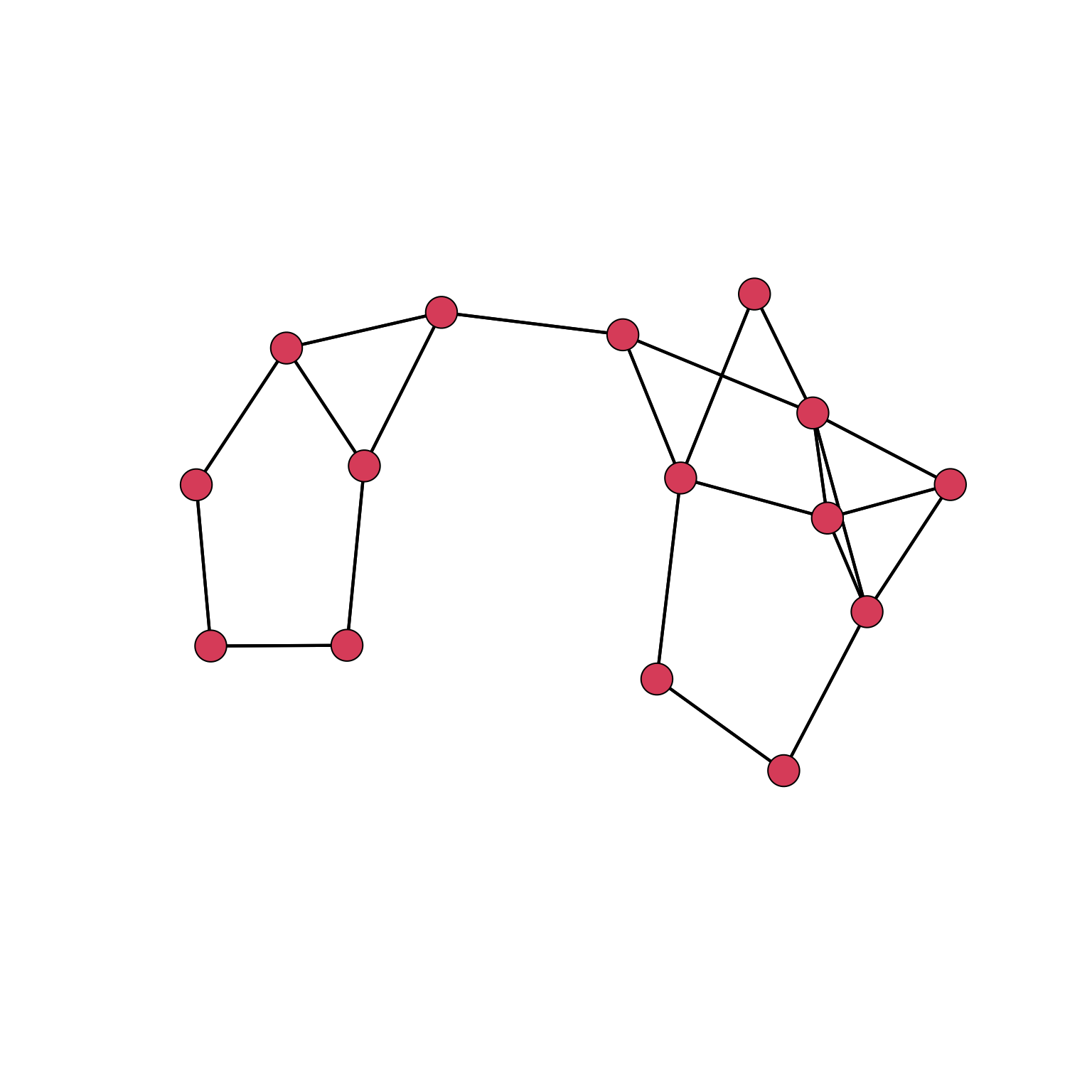

First, we need to find a good dichotomization threshold: 7 co-occurrences seems like a good level. To see why, let’s create a table of the connections:

table(Wolfe_Primates$JointPresence)

0 1 2 3 4 5 6 7 8 9 10 11 14 17

38 52 60 72 58 42 16 20 14 12 2 10 2 2 We are interested only in the most significant connections. The choice has to be made by making a strong theoretical assumption or by a sort of trial-and-error to check whether different thresholds might bring different insights. Be however sure that the results are not correlated with a particular visualization of the data.

par(mar = c(0, 0, 0, 0))

gplot(Wolfe_Primates$JointPresence>6, # NOTE: This is an an alternative way of dichotomizing "on the fly"

gmode="graph", # type of network: undirected

coord=Wolfe_Primates$Attributes[,c(2,4)], # coordinates to be use

vertex.cex=.8, # the size of the node shapes

vertex.col=(Wolfe_Primates$Attributes$Gender==1)*8, # a vector which is used to define the colour of each node (0=white and 8=grey)

displaylabels=TRUE, # to add the node labels

label.pos=1, # to position the labels below the node shapes

label.cex=.7, # to decrease the size of the node labels to 50% of the default

edge.col="grey70") # to make the colour of the ties/edges 30% black and 70% white

arrows(.2, .2, (max(Wolfe_Primates$Attributes$Age)+min(Wolfe_Primates$Attributes$Age))+.2, .2, length = 0.1)

arrows(.2, .2, .2, (max(Wolfe_Primates$Attributes[,2])+min(Wolfe_Primates$Attributes[,2])), length = 0.1)

text(0, (max(Wolfe_Primates$Attributes$Rank)+min(Wolfe_Primates$Attributes$Rank))-.5, labels="Rank",

cex=0.8, pos=4)

text((max(Wolfe_Primates$Attributes$Age)+min(Wolfe_Primates$Attributes$Age)),.2, labels="Age",

cex=0.8, pos=3)

Note that we are using age (y-axis) and rev_Rank (x-axis) as coordinates for nodes. Moreover, node colour is dependent on the gender; this is then a multivariate graphical representation of the graph.

We can see that older males are put on the top of the rank. Rank is correlated with age and gender is fundamental in detemining the position in the rank.

Using a nominal attribute to generate coordinates

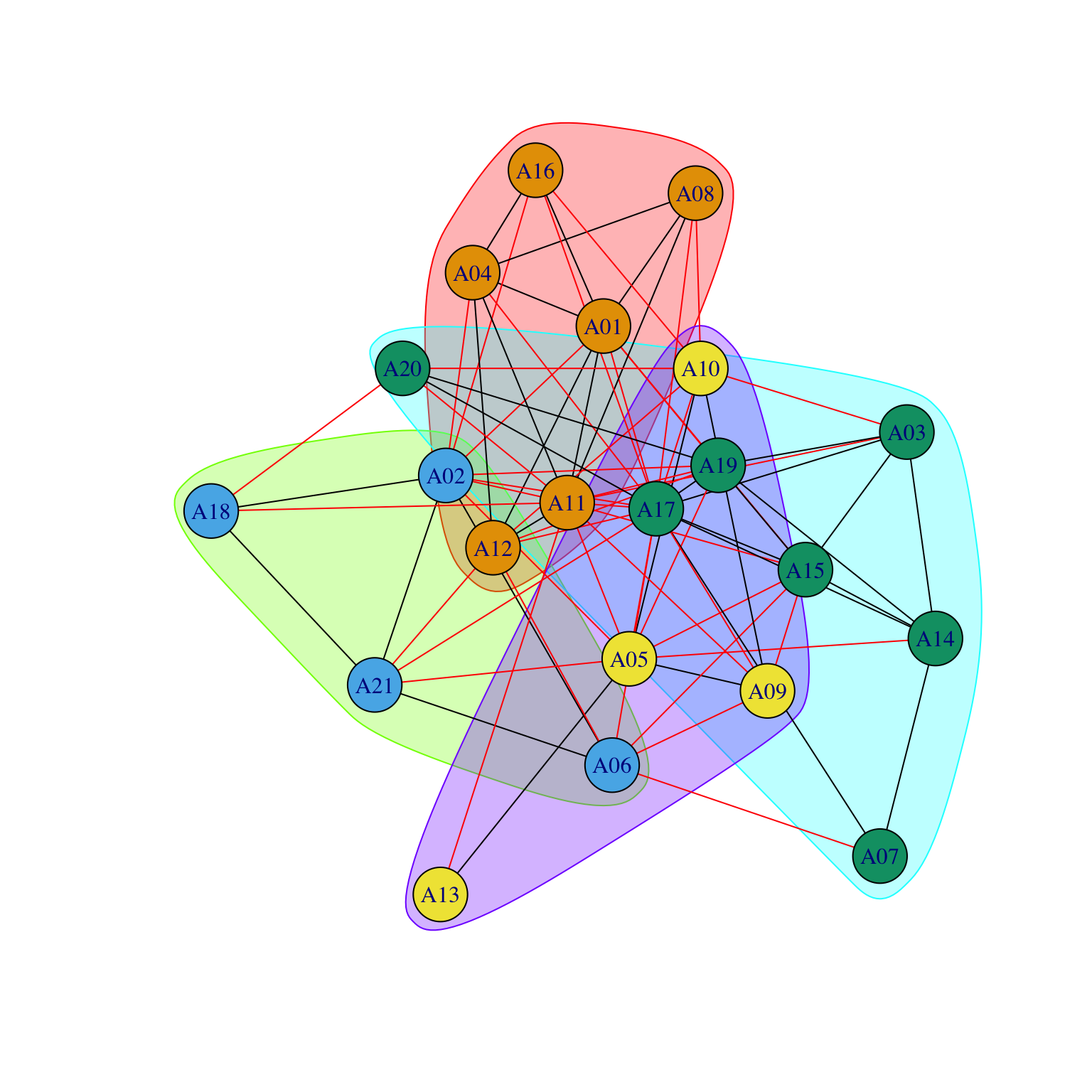

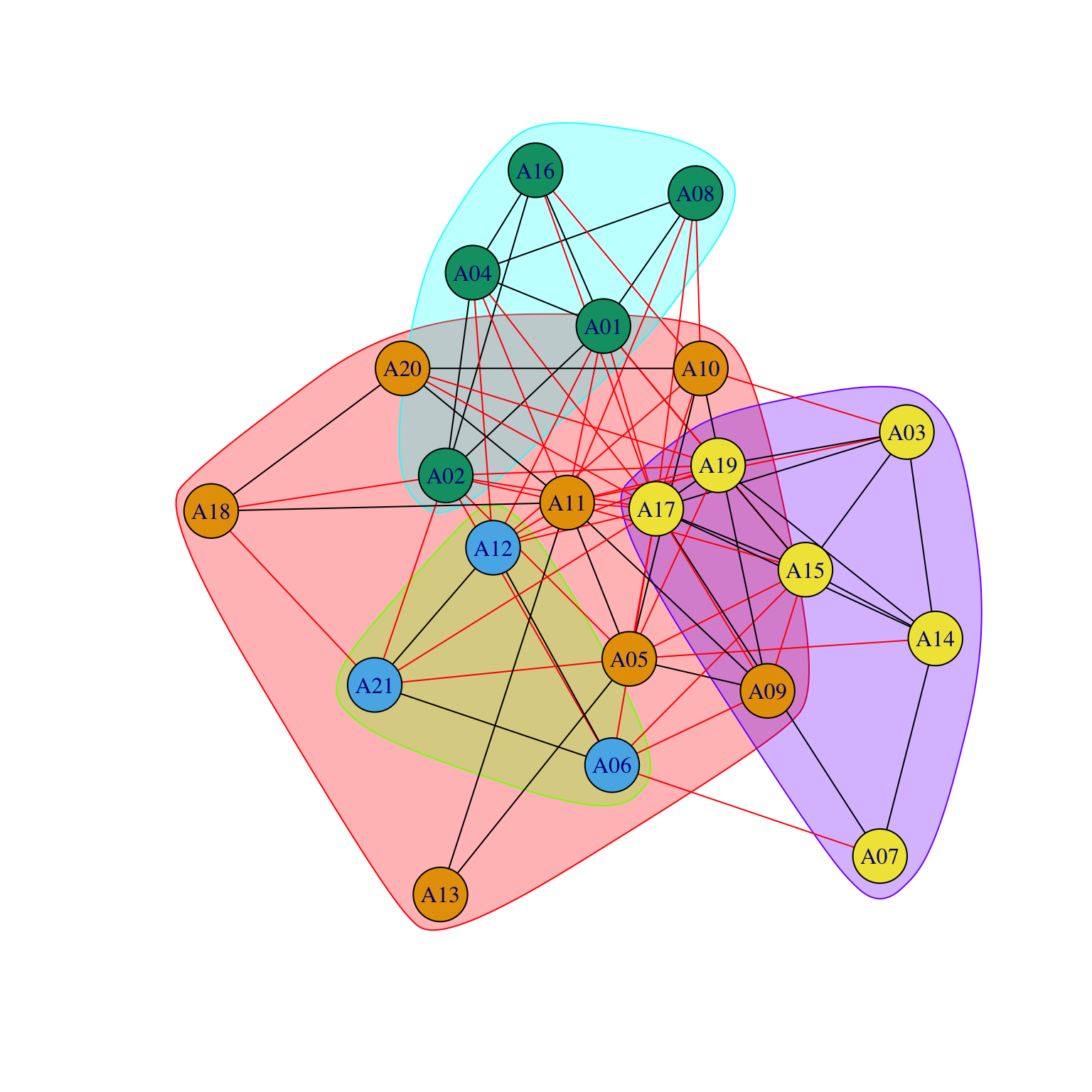

We will study the Krackhardt_HighTech: we wish to plot nodes belonging to the same department in the same group. When we want to use a nominal/categorical variable (such as Department) to position those nodes closer to each other who belong to the same category, we will use a trick. The default setting for plotting uses an algorithm that positions nodes close to each other if they are connected (see later). So what if we would create a matrix where we would have a value 1 when two nodes belong to the same category and 0 if they do not and we would use this to generate the positions of the nodes in the figure? We can then use these as coordinates for drawing the nodes in a graph containing the real network.

We first need to generate the matrix containing same department, which we will call MATRIX_A2:

#First, create a matrix with a list of departments

#and copy them in columns.

MATRIX_A1<-matrix(

Krackhardt_HighTech$Attributes$Department,

dim(

Krackhardt_HighTech$Friendship

)[1],

dim(

Krackhardt_HighTech$Friendship

)[1]

)

#if the two values are equal,

#it means that two people are in the same department.

MATRIX_A2<-(MATRIX_A1==t(MATRIX_A1))*1

MATRIX_A2 [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] [,11] [,12] [,13]

[1,] 1 1 0 1 0 0 0 0 0 0 0 0 0

[2,] 1 1 0 1 0 0 0 0 0 0 0 0 0

[3,] 0 0 1 0 1 0 0 0 1 0 0 0 1

[4,] 1 1 0 1 0 0 0 0 0 0 0 0 0

[5,] 0 0 1 0 1 0 0 0 1 0 0 0 1

[6,] 0 0 0 0 0 1 0 1 0 0 0 1 0

[7,] 0 0 0 0 0 0 1 0 0 0 0 0 0

[8,] 0 0 0 0 0 1 0 1 0 0 0 1 0

[9,] 0 0 1 0 1 0 0 0 1 0 0 0 1

[10,] 0 0 0 0 0 0 0 0 0 1 1 0 0

[11,] 0 0 0 0 0 0 0 0 0 1 1 0 0

[12,] 0 0 0 0 0 1 0 1 0 0 0 1 0

[13,] 0 0 1 0 1 0 0 0 1 0 0 0 1

[14,] 0 0 1 0 1 0 0 0 1 0 0 0 1

[15,] 0 0 1 0 1 0 0 0 1 0 0 0 1

[16,] 1 1 0 1 0 0 0 0 0 0 0 0 0

[17,] 0 0 0 0 0 1 0 1 0 0 0 1 0

[18,] 0 0 0 0 0 0 0 0 0 1 1 0 0

[19,] 0 0 1 0 1 0 0 0 1 0 0 0 1

[20,] 0 0 1 0 1 0 0 0 1 0 0 0 1

[21,] 0 0 0 0 0 1 0 1 0 0 0 1 0

[,14] [,15] [,16] [,17] [,18] [,19] [,20] [,21]

[1,] 0 0 1 0 0 0 0 0

[2,] 0 0 1 0 0 0 0 0

[3,] 1 1 0 0 0 1 1 0

[4,] 0 0 1 0 0 0 0 0

[5,] 1 1 0 0 0 1 1 0

[6,] 0 0 0 1 0 0 0 1

[7,] 0 0 0 0 0 0 0 0

[8,] 0 0 0 1 0 0 0 1

[9,] 1 1 0 0 0 1 1 0

[10,] 0 0 0 0 1 0 0 0

[11,] 0 0 0 0 1 0 0 0

[12,] 0 0 0 1 0 0 0 1

[13,] 1 1 0 0 0 1 1 0

[14,] 1 1 0 0 0 1 1 0

[15,] 1 1 0 0 0 1 1 0

[16,] 0 0 1 0 0 0 0 0

[17,] 0 0 0 1 0 0 0 1

[18,] 0 0 0 0 1 0 0 0

[19,] 1 1 0 0 0 1 1 0

[20,] 1 1 0 0 0 1 1 0

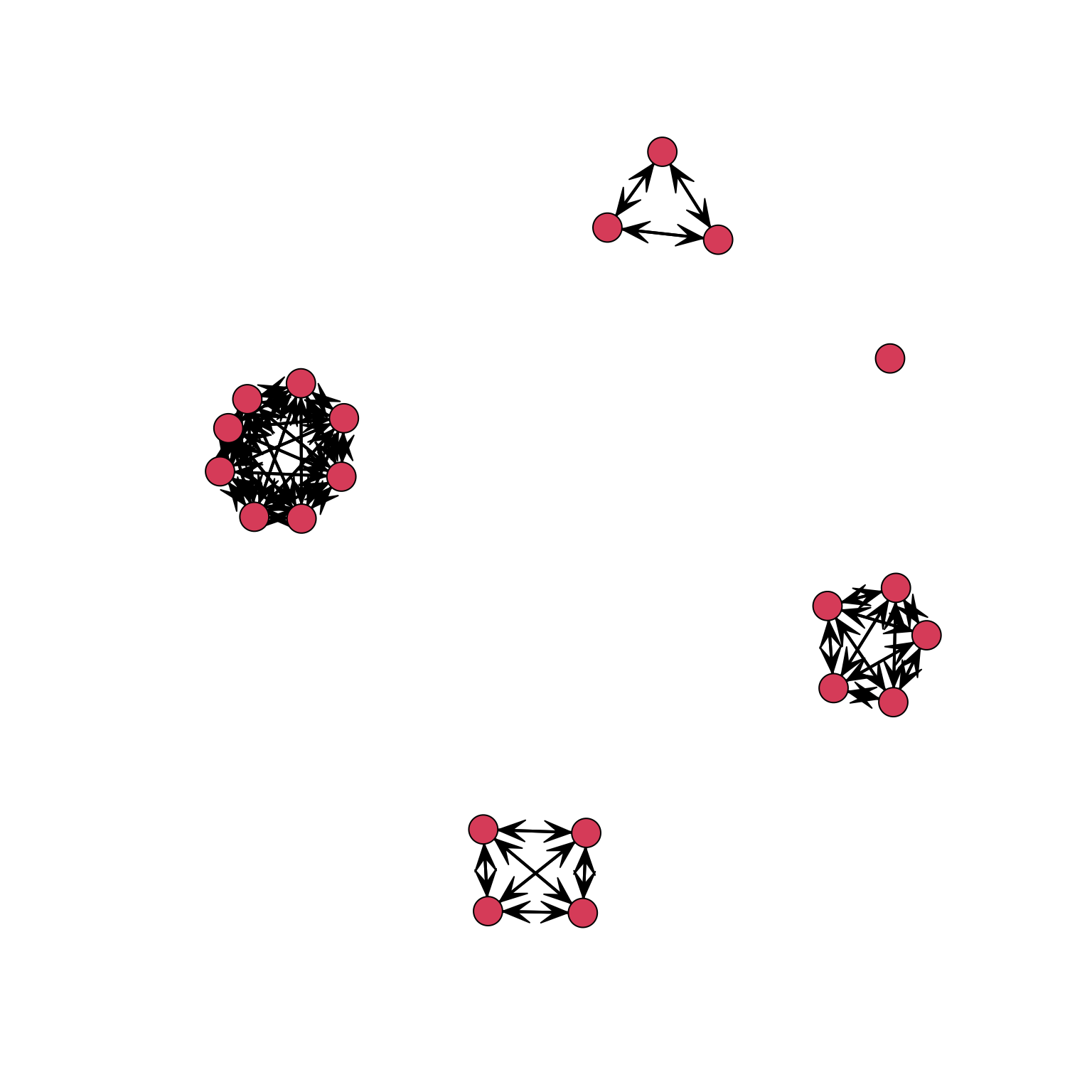

[21,] 0 0 0 1 0 0 0 1We now generate the coordinates using the new matrix MATRIX_A2 and store the coordinates of the graph as a new object (COORD_A2):

COORD_A2 <- gplot(MATRIX_A2)

As we can see in the graph, the result is what we wanted: nodes from the same department are positioned close to each other. We now can use these coordinates (stored in the object COORD_A2) in the same way we used two continuous attributes before to define the x and y axes (coord=COORD_A2) and obtain a graph for the friendship network where nodes are positioned according to department:

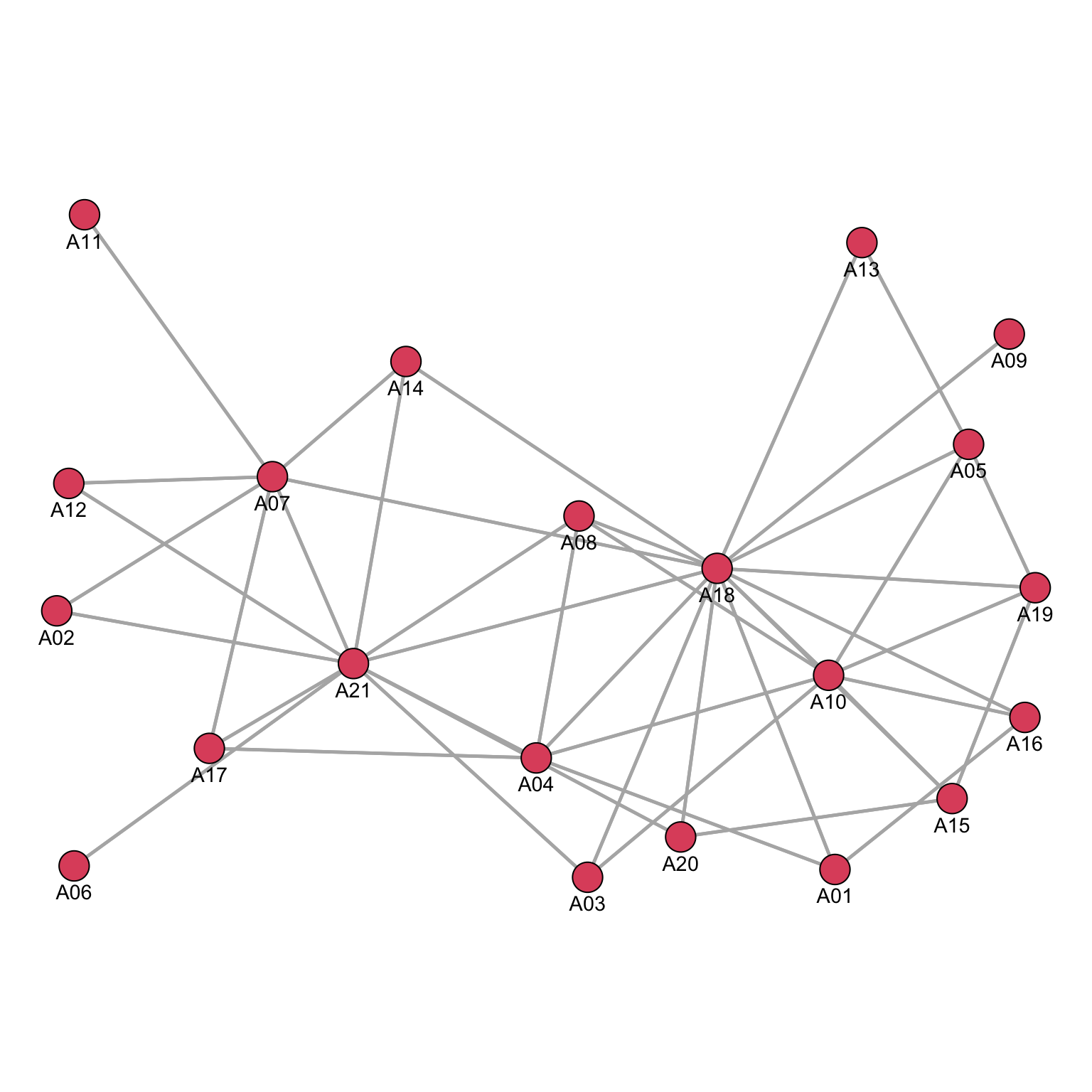

par(mar = c(0, 0, 0, 0))

gplot((Krackhardt_HighTech$Friendship)*t(Krackhardt_HighTech$Friendship),

gmode="graph",

coord=COORD_A2,

vertex.cex=.8,

displaylabels=TRUE,

label.pos=1,

label.cex=.7,

edge.col="grey70")

While the nodes did not move, we can now visualize the relationship among departments. For example, we can now see that A11 is a fundamental element in establishing connections among separated departments.

We could use the same approach to plot another network: the advice network.

par(mar = c(0, 0, 0, 0))

gplot((Krackhardt_HighTech$Advice)*t(Krackhardt_HighTech$Advice),

gmode="graph",

coord=COORD_A2,

vertex.cex=.8,

displaylabels=TRUE,

label.pos=1,

label.cex=.7,

edge.col="grey70")

By using the same coordinates, we can compare and contrast the flow of information among different networks.

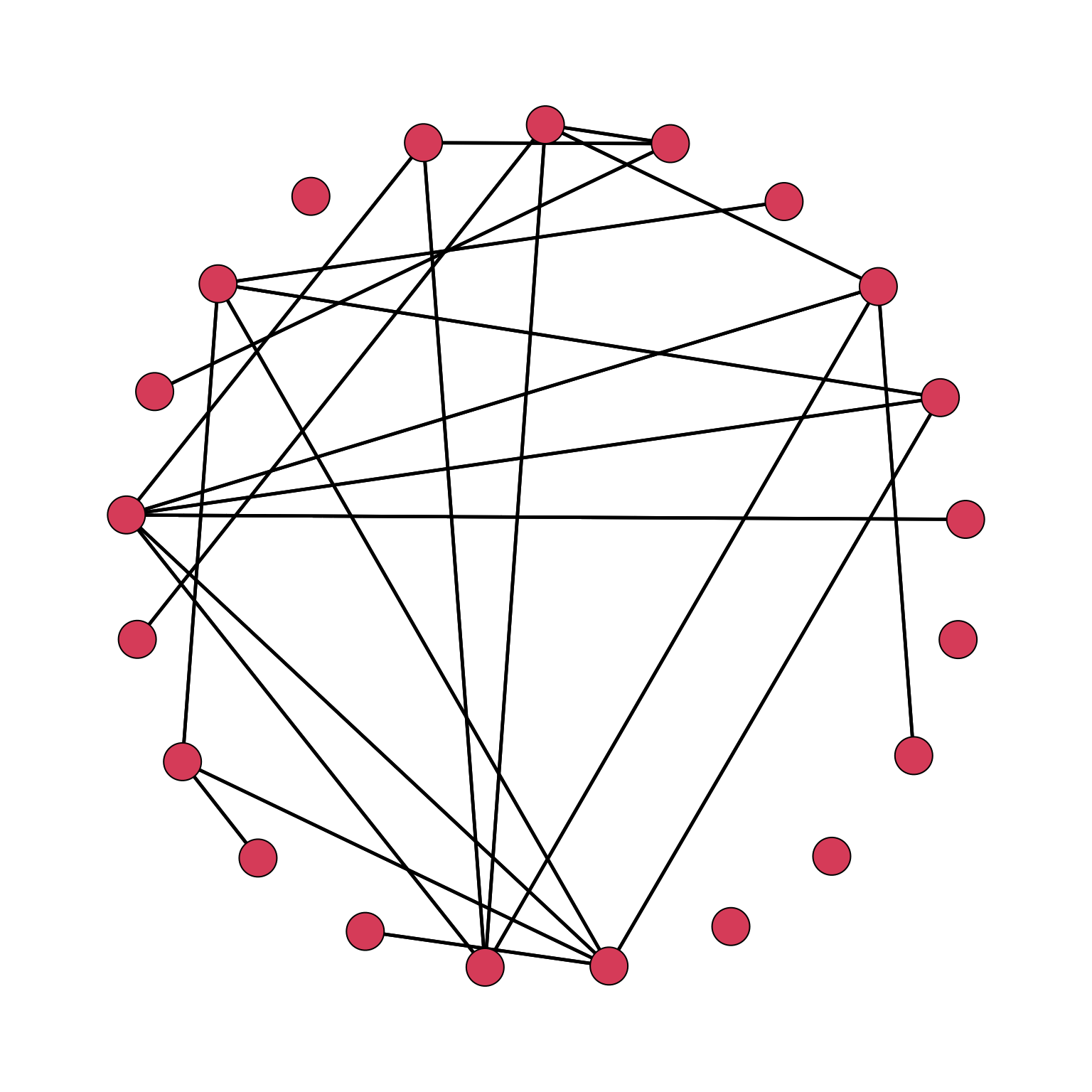

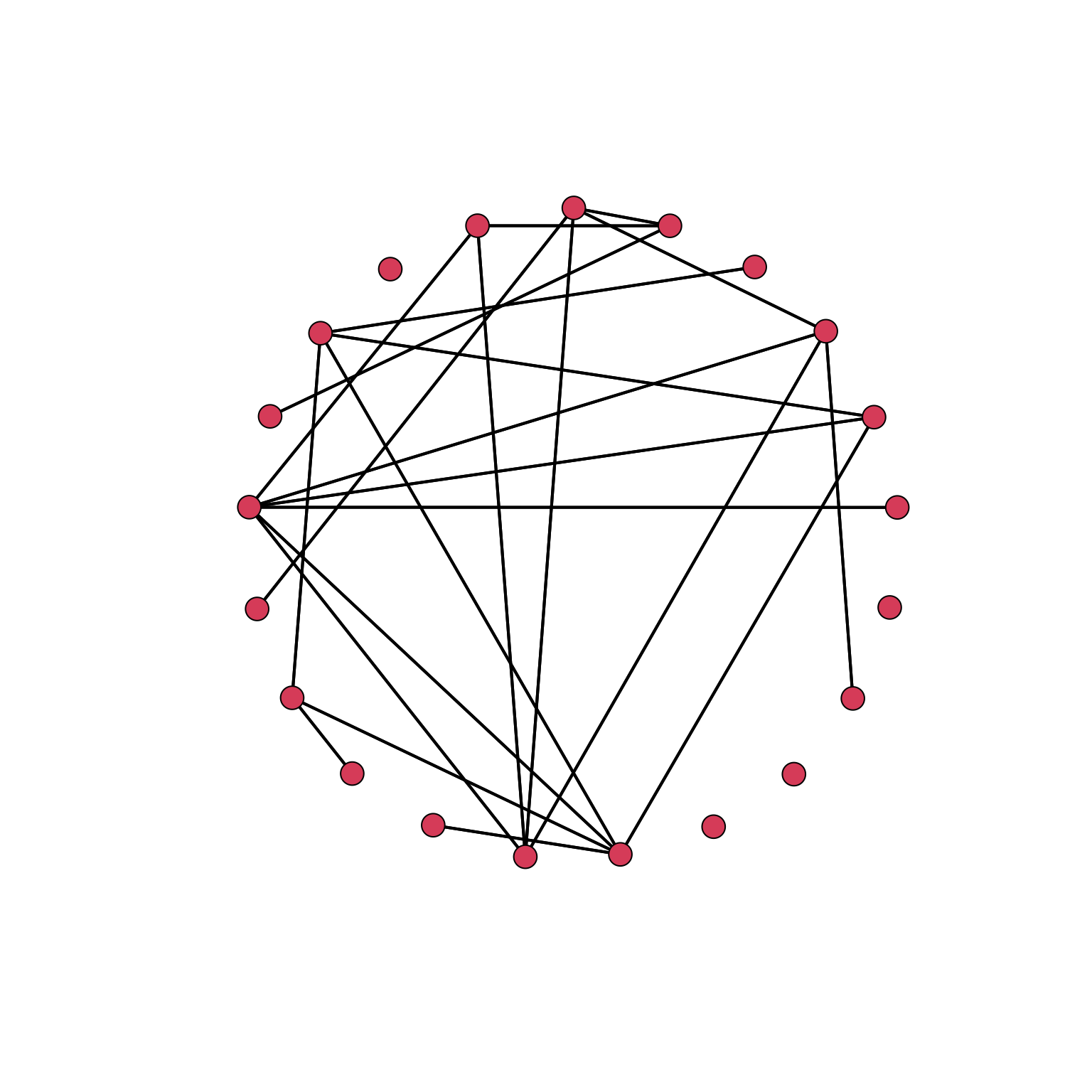

Plotting with optimization algorithms

Fruchterman and Reingold (1991) is the default in SNA package:

par(mar = c(0, 0, 0, 0))

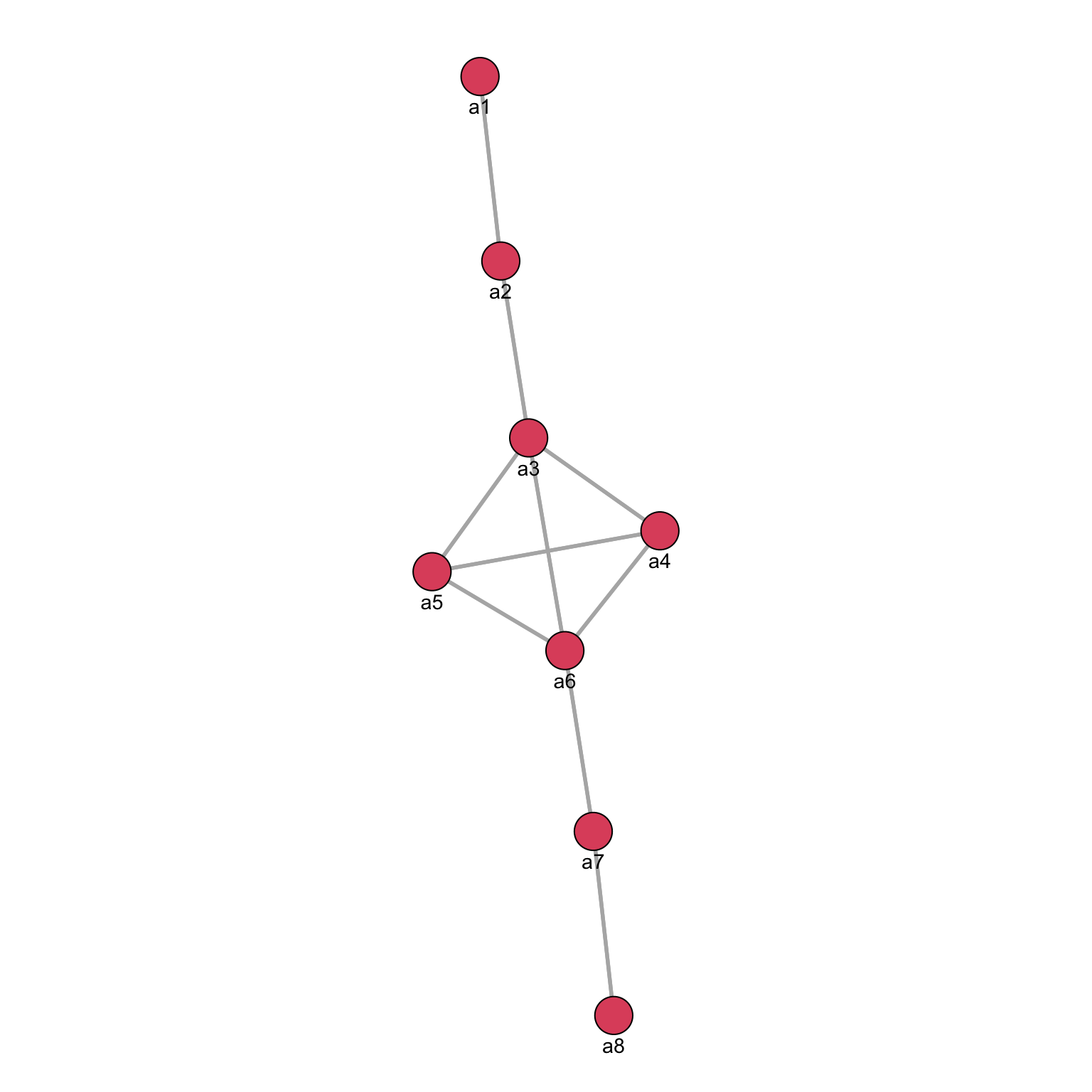

gplot(ASNR_Fig07x11,

gmode="graph",

vertex.cex=.8,

displaylabels=TRUE,

label.pos=1,

label.cex=.9,

edge.col="grey70")

mode="kamadakawai":

par(mar = c(0, 0, 0, 0))

gplot(ASNR_Fig07x11,

mode="kamadakawai",

gmode="graph",

vertex.cex=.8,

displaylabels=TRUE,

label.pos=1,

label.cex=.9,

edge.col="grey70")

There is not a best algorithm: it all depends on your data and objective.

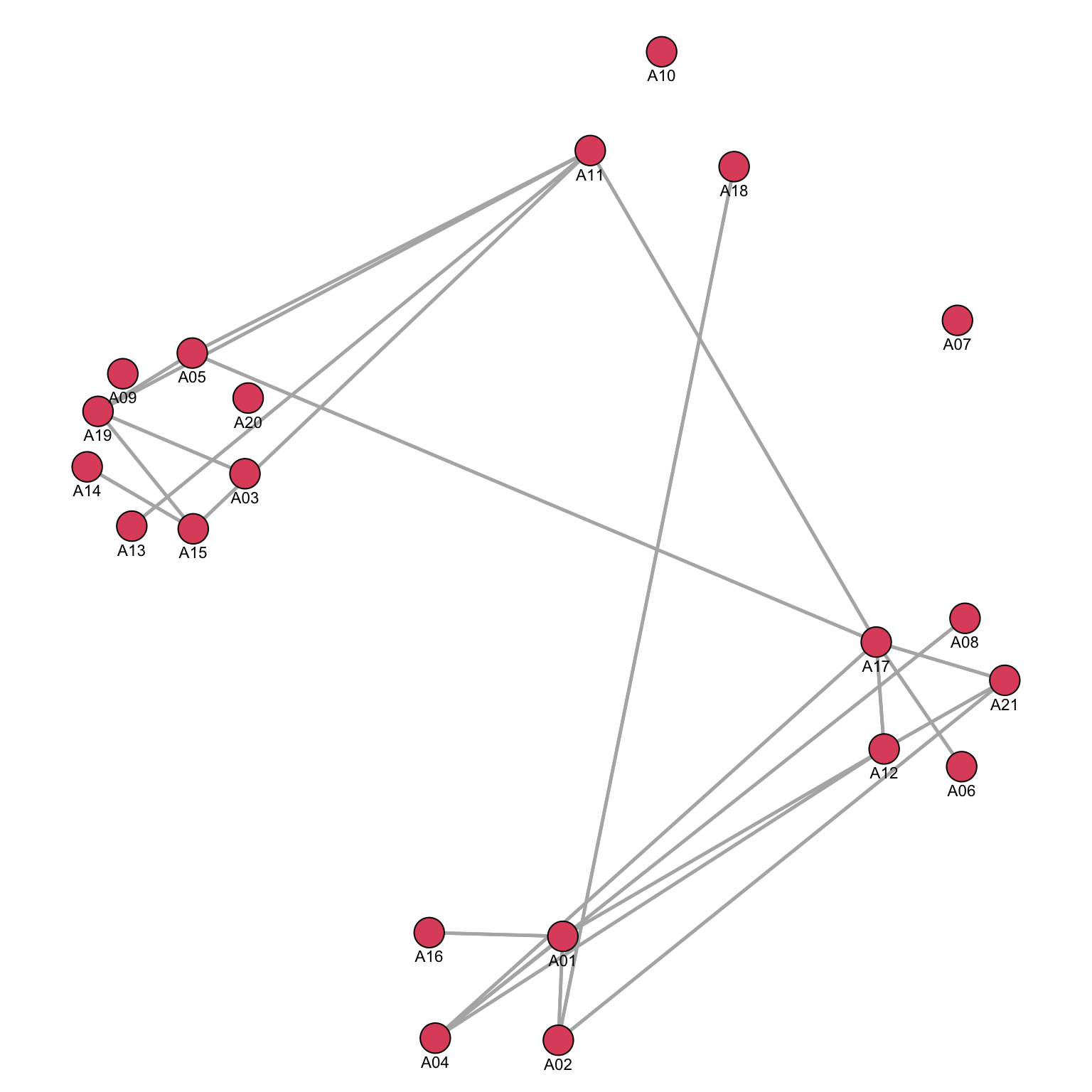

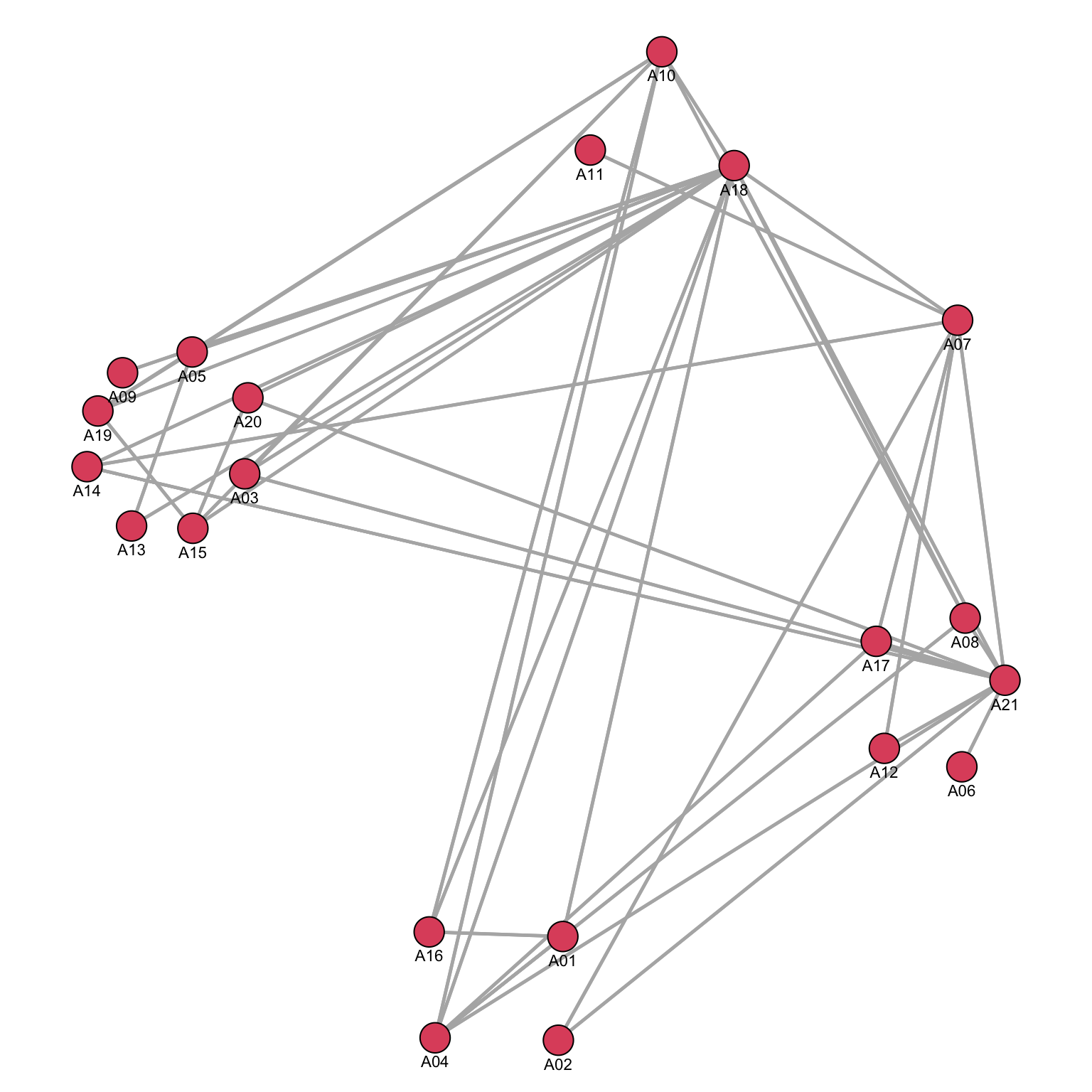

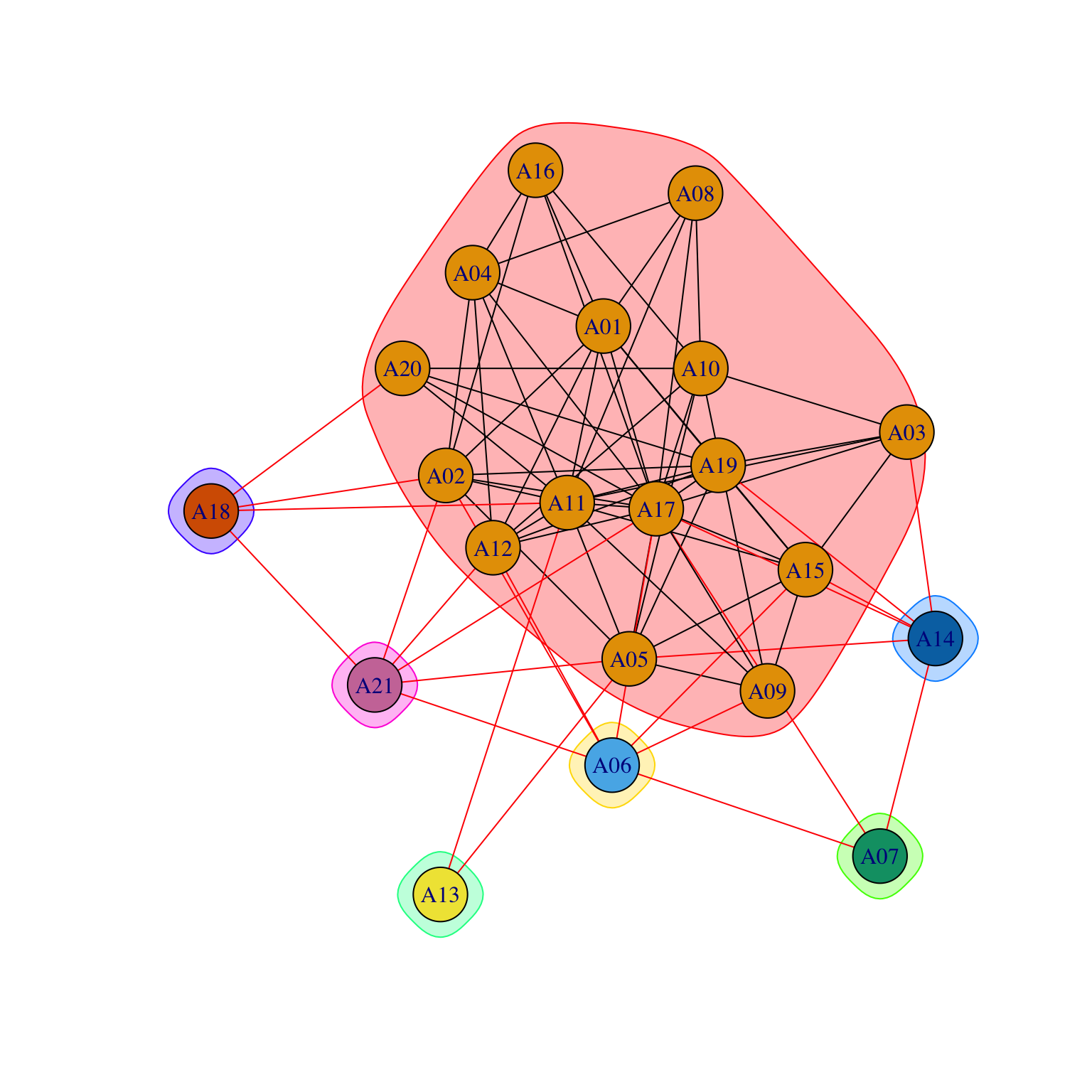

They usually help with more complex networks:

par(mar = c(0, 0, 0, 0))

gplot(Krackhardt_HighTech$Friendship*t(Krackhardt_HighTech$Friendship),

gmode="graph",

vertex.cex=.8,

displaylabels=TRUE,

label.pos=1,

label.cex=.9,

edge.col="grey70")

But, with the advice network, visualization is far less useful.

par(mar = c(0, 0, 0, 0))

gplot(Krackhardt_HighTech$Advice*t(Krackhardt_HighTech$Advice),

gmode="graph",

vertex.cex=.8,

displaylabels=TRUE,

label.pos=1,

label.cex=.9,

edge.col="grey70")

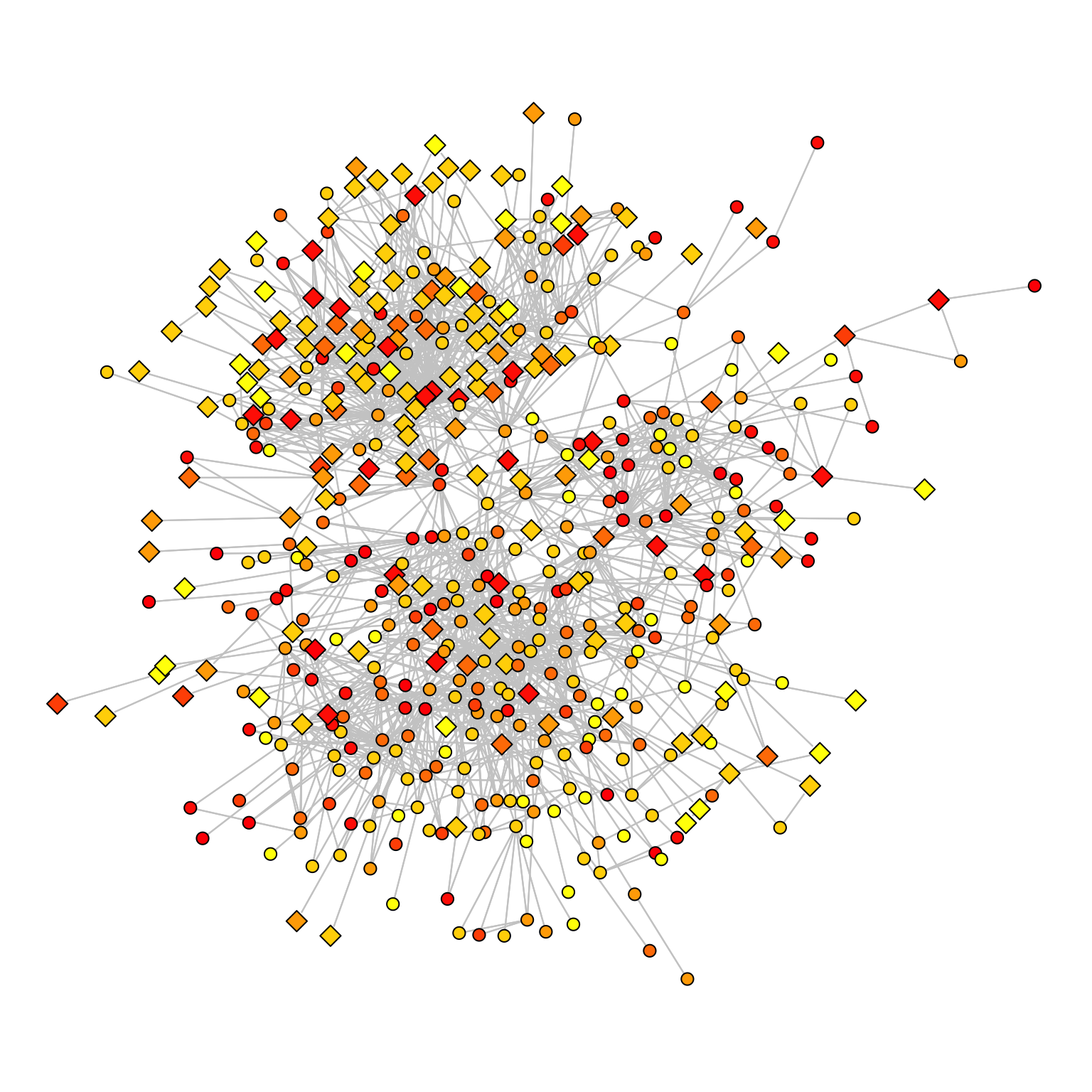

Let’s see an example with a large collaboration network:

par(mar = c(0, 0, 0, 0))

gplot(Borgatti_Scientists504$Collaboration>3,

mode="kamadakawai",

gmode="graph",

vertex.cex=.8,

edge.col="grey70")

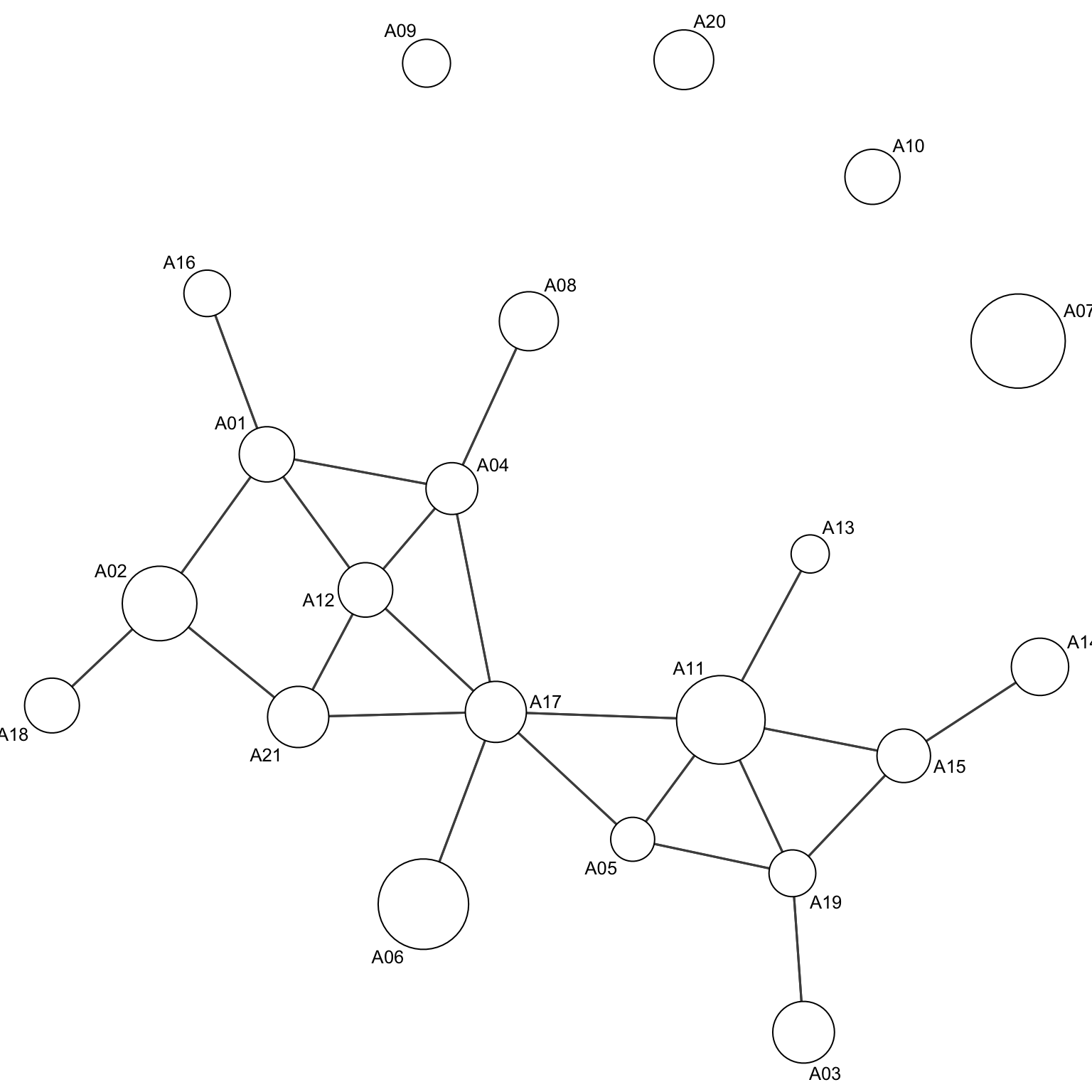

Embedding node attributes

When we analyse a graph visually, we might want to include as much information as possible in the visual representation. There are a few tricks which can be used to include further dimensionality of a graph. We might want to add information about the nodes:

- Shapes

- Size of the dots

- Colours

- Labels (again, size and colour)

par(mar=c(0,0,0,0))

gplot(Krackhardt_HighTech$Friendship_SymMin,

gmode="graph",

edge.col="grey30",

edge.lwd=.4,

vertex.cex=Krackhardt_HighTech$Attributes$Tenure/20+1, # node dimension is dependent on an attribute

vertex.col="white",

displaylabels=TRUE,

label.cex=.8)

Here, node dimension is a function of the tenure.

Categorical variables can be represented by colours and shapes:

par(mar=c(0,0,0,0))

gplot(Krackhardt_HighTech$Friendship_SymMin,

gmode="graph",

mode="fruchtermanreingold",

jitter=F,

edge.col="grey30",

edge.lwd=.4,

vertex.cex=Krackhardt_HighTech$Attributes$Tenure/20+1,

vertex.col=1+(Krackhardt_HighTech$Attributes$Level==2)+(Krackhardt_HighTech$Attributes$Level==3)*-1,

vertex.sides=3+(Krackhardt_HighTech$Attributes$Level==2)+(Krackhardt_HighTech$Attributes$Level==3)*50,

displaylabels=TRUE,

label.cex=.8)

Representing valued networks

A useful computation might consist in adding values to the ties regarding weights. We will use the colorRampPalette to create a palette of colours to visualize information; in our case, we will extract and use tenure years and visualize it.

Tenure <- (Borgatti_Scientists504$Attributes$Years)

# I normalize and discretize the tenure levels between 0 and 10

TenureA <- (round(10*(Tenure)/max((Tenure))))

# return functions that interpolate a set of given colours to create new colour palettes

colfunc <- colorRampPalette(c("yellow", "red"))

COLWB <- colfunc(7)The colorRampPalette takes two values as the extreme of your range of colours and interpolates the intermediate colours in the range. We can assign a colour to each level of the variable:

TenureA2 <- TenureA

TenureA2[TenureA==0] <- COLWB[1]

TenureA2[TenureA==1] <- COLWB[2]

TenureA2[TenureA==2] <- COLWB[3]

TenureA2[TenureA==3] <- COLWB[4]

TenureA2[TenureA==4] <- COLWB[5]

TenureA2[TenureA==5] <- COLWB[6]

TenureA2[TenureA==6] <- COLWB[6]

TenureA2[TenureA==7] <- COLWB[7]

TenureA2[TenureA==8] <- COLWB[7]

TenureA2[TenureA==9] <- COLWB[7]

TenureA2[TenureA==10] <- COLWB[7]We can then plot the graph using the colour to add another dimension to the visualization:

par(mar=c(0,0,0,0))

gplot(Borgatti_Scientists504$Collaboration>3,

mode="kamadakawai",

gmode="graph",

edge.col="grey80",

edge.lwd=.2,

vertex.col=TenureA2,

vertex.cex=1+.7*(2-Borgatti_Scientists504$Attributes$Sex),

vertex.sides=(Borgatti_Scientists504$Attributes$Sex-1)*47+4)

Note that the vertex shapes and sizes show the sex.

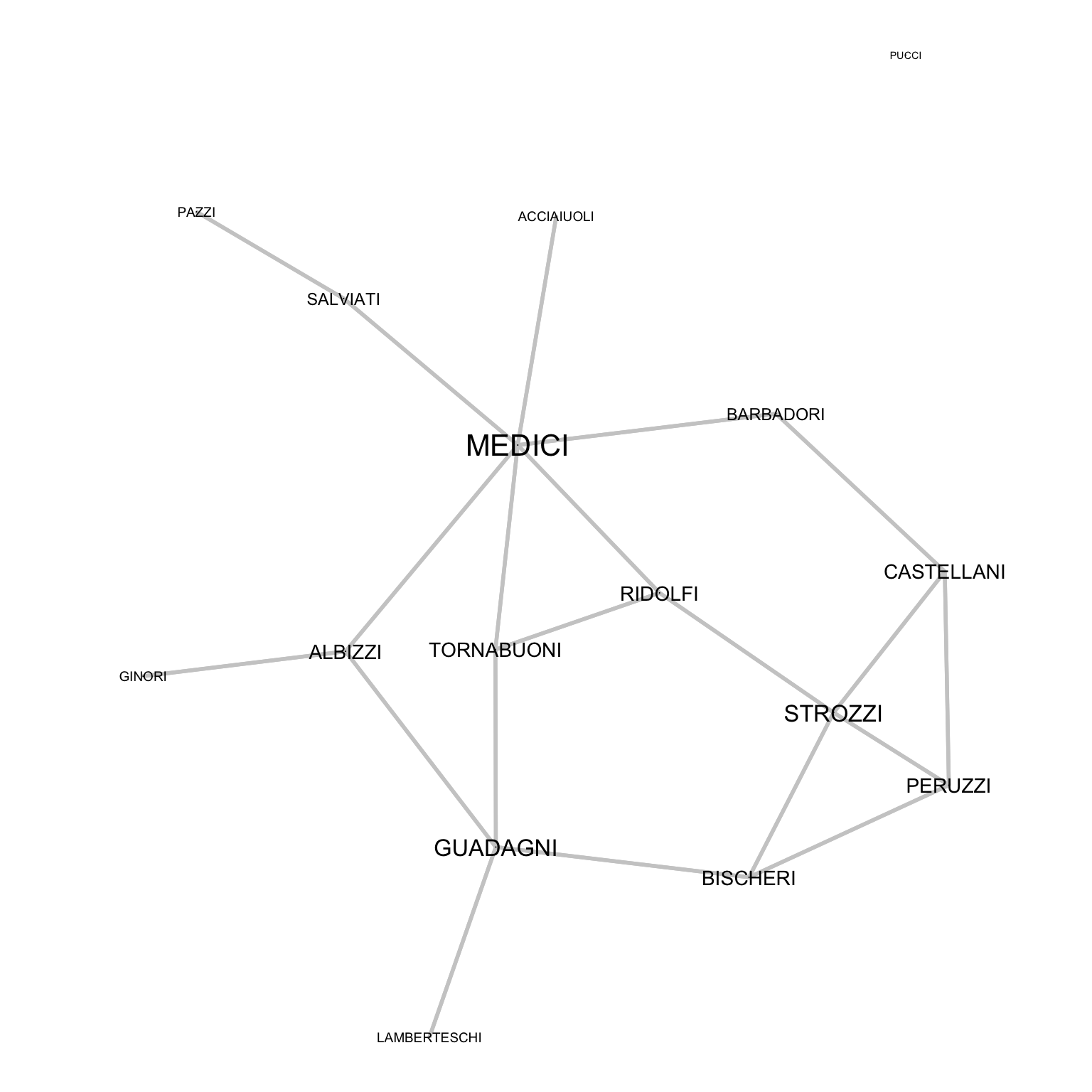

Padgett shows that Medici centrality in a series of networks (trade, marriages) explains their raise to power: relying on degree centrality, we can define the size of the labels with label.cex. To increase visibility we removed the node shapes. To compute the degree, we use the sna::degree function, which will in this case return the number of marriages that connects families as the size of the node.

par(mar=c(0,0,0,0))

PFM_Degree<-sna::degree(Padgett_FlorentineFamilies$Marriage)

gplot(Padgett_FlorentineFamilies$Marriage,

gmode="graph",

edge.col="grey80",

edge.lwd=1.2,

vertex.cex=0,

vertex.col="white",

displaylabels=TRUE,

label.pos=5,

label.cex=PFM_Degree/14+.45)

To effectively substitute the nodes, they are set with dimension 0, allowing labels to substitute them.

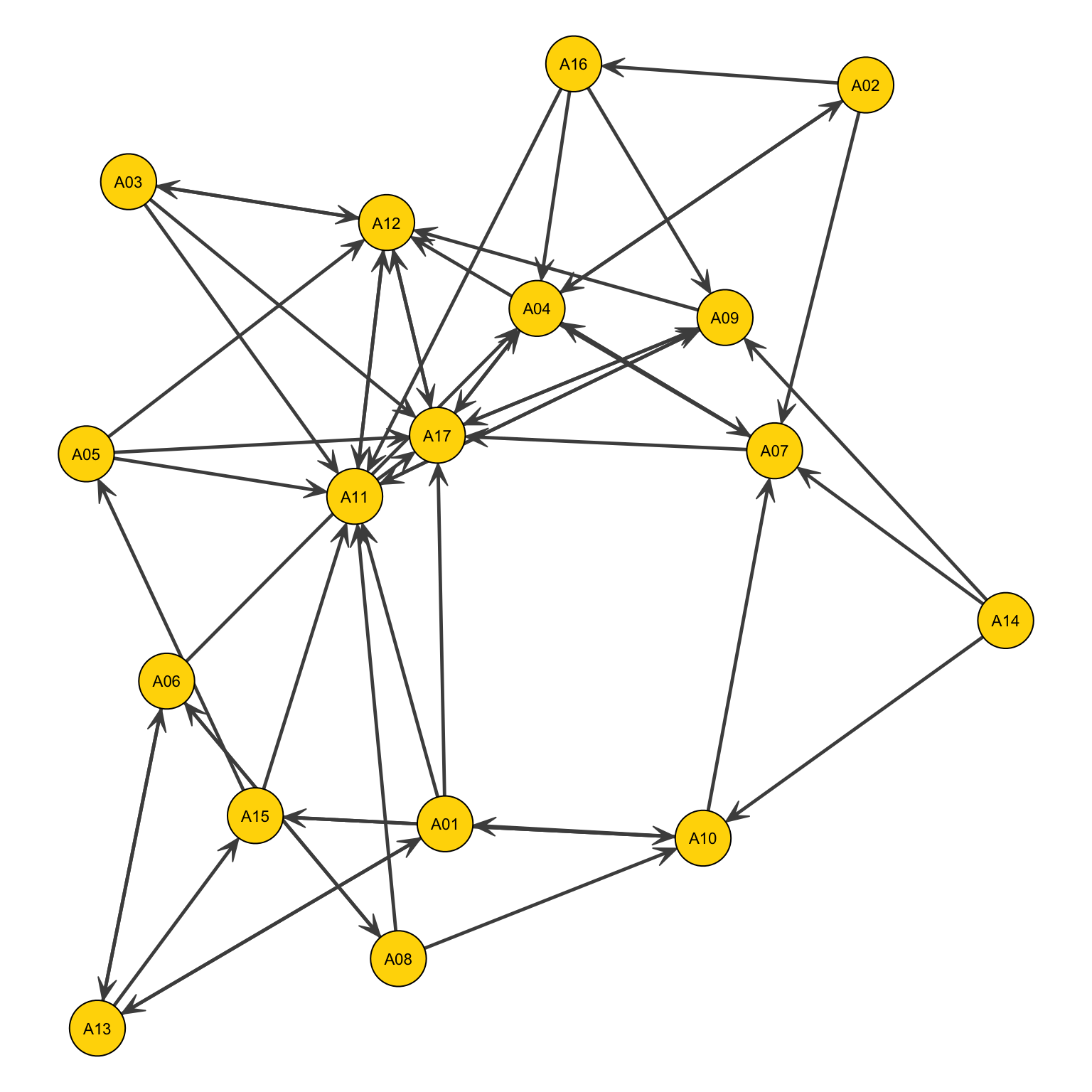

Also ties can represent useful information. In this case ties that go in two directions can be represented as straight lines with two arrows:

par(mar=c(0,0,0,0))

gplot(Newcomb_Fraternity$PreferenceT00<4,

gmode="digraph", # not that we changed the mode

#layout

jitter=F,

#ties

edge.col="grey30",

edge.lwd=1,

arrowhead.cex=.6,

#nodes

vertex.col="gold",

vertex.cex=1.4,

#labels

displaylabels=TRUE,

label.pos=5,

label.cex=.7)

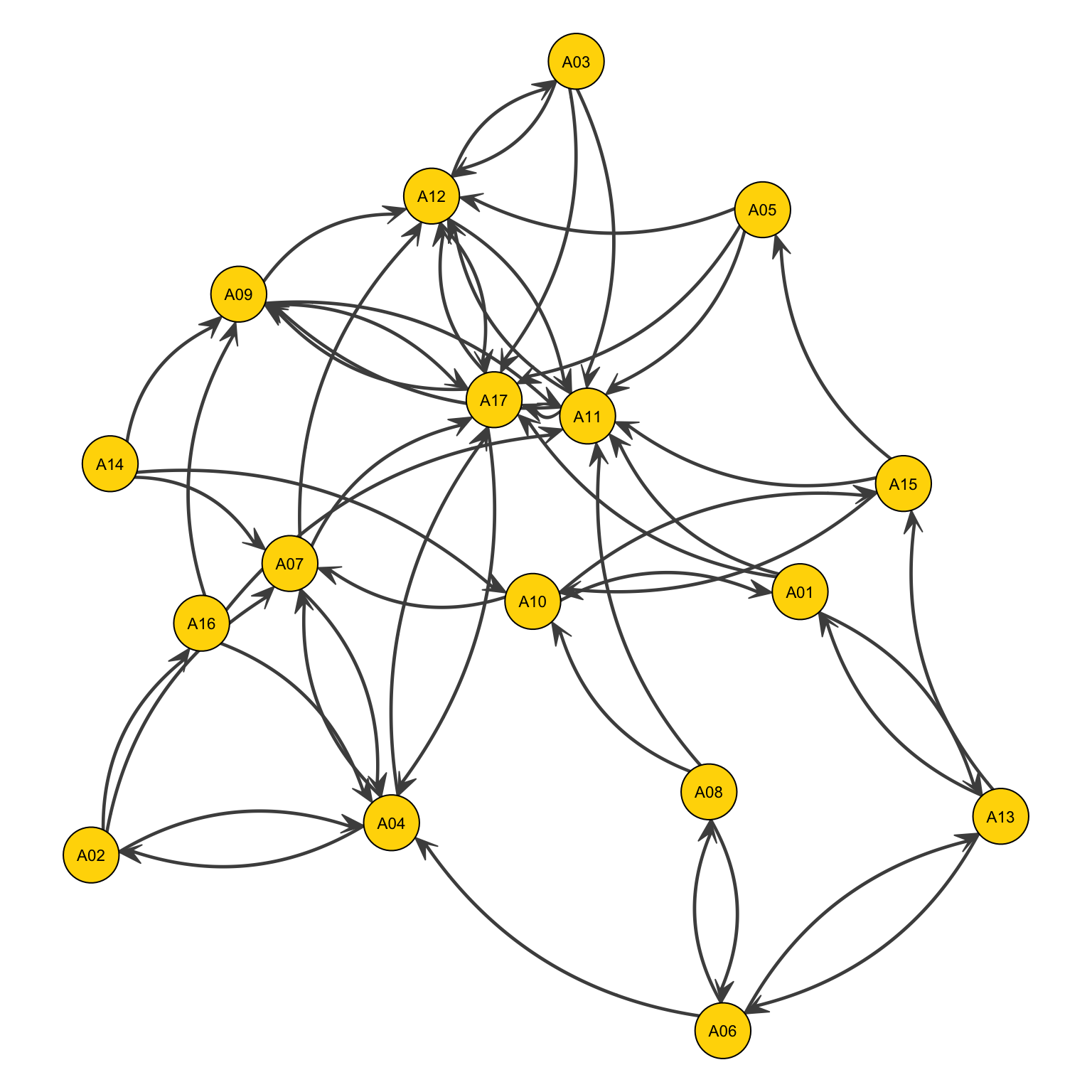

Or by two curvy lines

par(mar=c(0,0,0,0))

gplot(Newcomb_Fraternity$PreferenceT00<4,

gmode="digraph",

#layout

jitter = F,

#ties

edge.col="grey30",

edge.lwd=1,

usecurve=T, # Adding this parameter

edge.curve=.09,

arrowhead.cex=.6,

#nodes

vertex.col="gold",

vertex.cex=1.4,

#labels

displaylabels=TRUE,

label.pos=5,

label.cex=.7)

One last thing. Frequently a project requires to plot multiple types of ties in the same network; for example, to represent both antagonistic or friendly relationship among a network of people. This kind of information is usually stored in different networks, which are then stored in different adjacency matrices.

HBG <- Hawthorne_BankWiring$Game # Positive ties

HBA <- Hawthorne_BankWiring$Antagonistic # Negative tiesWe need to rewrite the code so that: if the relation is only positive, it has colour green (3), only negative has colour red (2), while both is black (1).

HB <- (2*HBG+HBA)

HBc <- (HBA==1)*(HBG==1)*1+(HBA==0)*(HBG==1)*3+(HBA==1)*(HBG==0)*2

#Change tie width so it is also visible in black & white

HBl <- (HBA==1)*(HBG==1)*5+(HBA==0)*(HBG==1)*3+(HBA==1)*(HBG==0)*1I am now ready to represent the network:

par(mar=c(0,0,0,0))

gplot(HB, jitter = F, gmode="graph", displaylabels=TRUE, label.pos=5,

vertex.col="grey90", edge.lwd=HBl,

label.cex=.7, vertex.cex=1.4, edge.col=HBc)

Statistical properties of networks

These statistical properties are used to characterize networks.

- Monadic (e.g. concerning the single nodes):

- degree

- centrality measures

- Dyadic (e.g. concerning the relationship between two nodes):

- path,

- walks,

- connectivity

- That concern groups of nodes:

- connected components

- clustering

- assortativity

- communities

- average shortest path

- diameter

Monadic

We are talking about the characterization of nodes.

We use the degree, : the number of edges incident to a vertex.

- a node with is isolated.

- a node with is a leaf, or terminal node.

- a node with is an hub.

For directed networks:

- In-degree: the number of directed edges that point to a vertex.

- Out-degree: the number of directed edges that start from a vertex.

For undirected, unweighed networks, the degree of a node is the row-sum (or, equivalently, the column-sum) of its rows/columns in the adjacency matrix.

For undirected, weighted networks, the degree of a node is the row-sum (or equivalently the column-sum) of the non-zero elements in its row (or column) in the adjacency matrix.

- Summing the weights on a row (column) we can also get the so-called Node Strength.

For directed networks:

- the out-degree is the row-sum of their row in the adjacency matrix.

- the in-degree is the column-sum of their column in the adjacency matrix.

To compute the degree in R:

xDegreeCentrality(Krackhardt_HighTech$Advice) Degree nDegree

A01 6 0.30

A02 3 0.15

A03 15 0.75

A04 12 0.60

A05 15 0.75

A06 1 0.05

A07 8 0.40

A08 8 0.40

A09 13 0.65

A10 14 0.70

A11 3 0.15

A12 2 0.10

A13 6 0.30

A14 4 0.20

A15 20 1.00

A16 4 0.20

A17 5 0.25

A18 17 0.85

A19 11 0.55

A20 12 0.60

A21 11 0.55This command prints the origin and each degree; moreover, we also have the average strength of the connection of that node associated to it. Note that in undirected network, it automatically computes the out-degree; to obtain the in-degree, we need to first transpose the matrix.

xDegreeCentrality(t(Krackhardt_HighTech$Advice)) Degree nDegree

A01 13 0.65

A02 18 0.90

A03 5 0.25

A04 8 0.40

A05 5 0.25

A06 10 0.50

A07 13 0.65

A08 10 0.50

A09 4 0.20

A10 9 0.45

A11 11 0.55

A12 7 0.35

A13 4 0.20

A14 10 0.50

A15 4 0.20

A16 8 0.40

A17 9 0.45

A18 15 0.75

A19 4 0.20

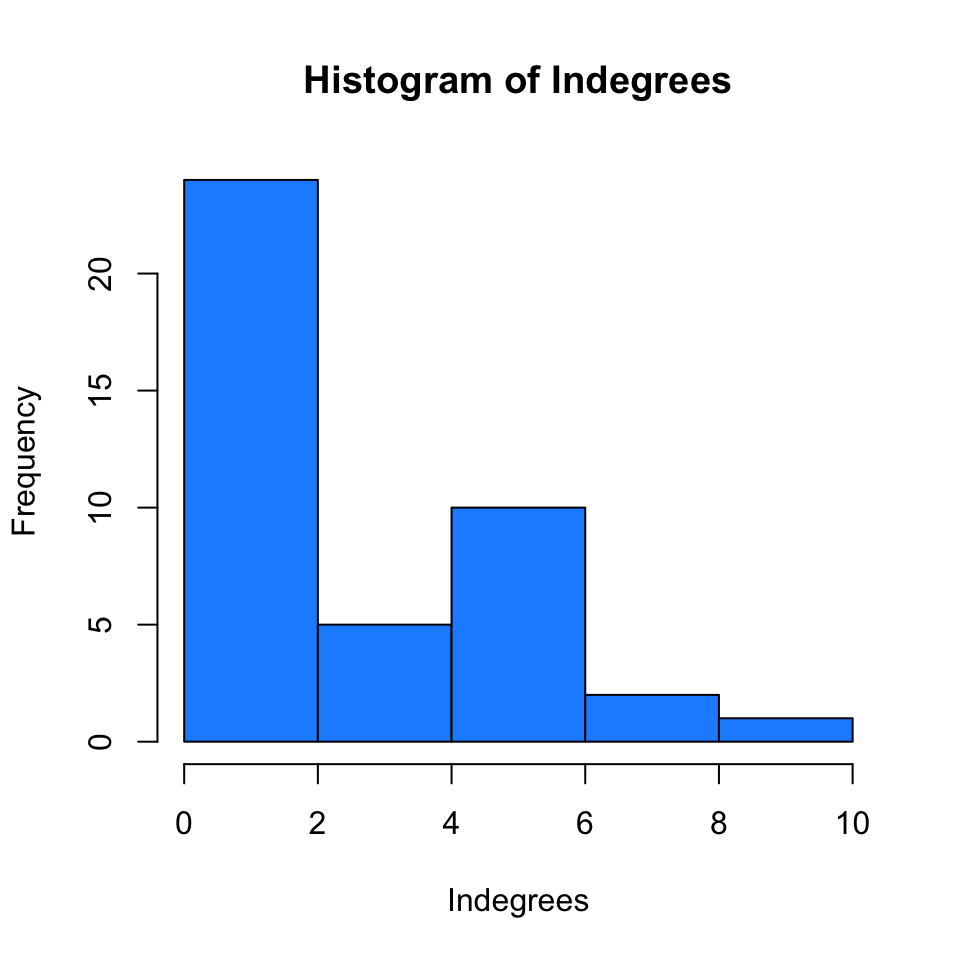

A20 8 0.40

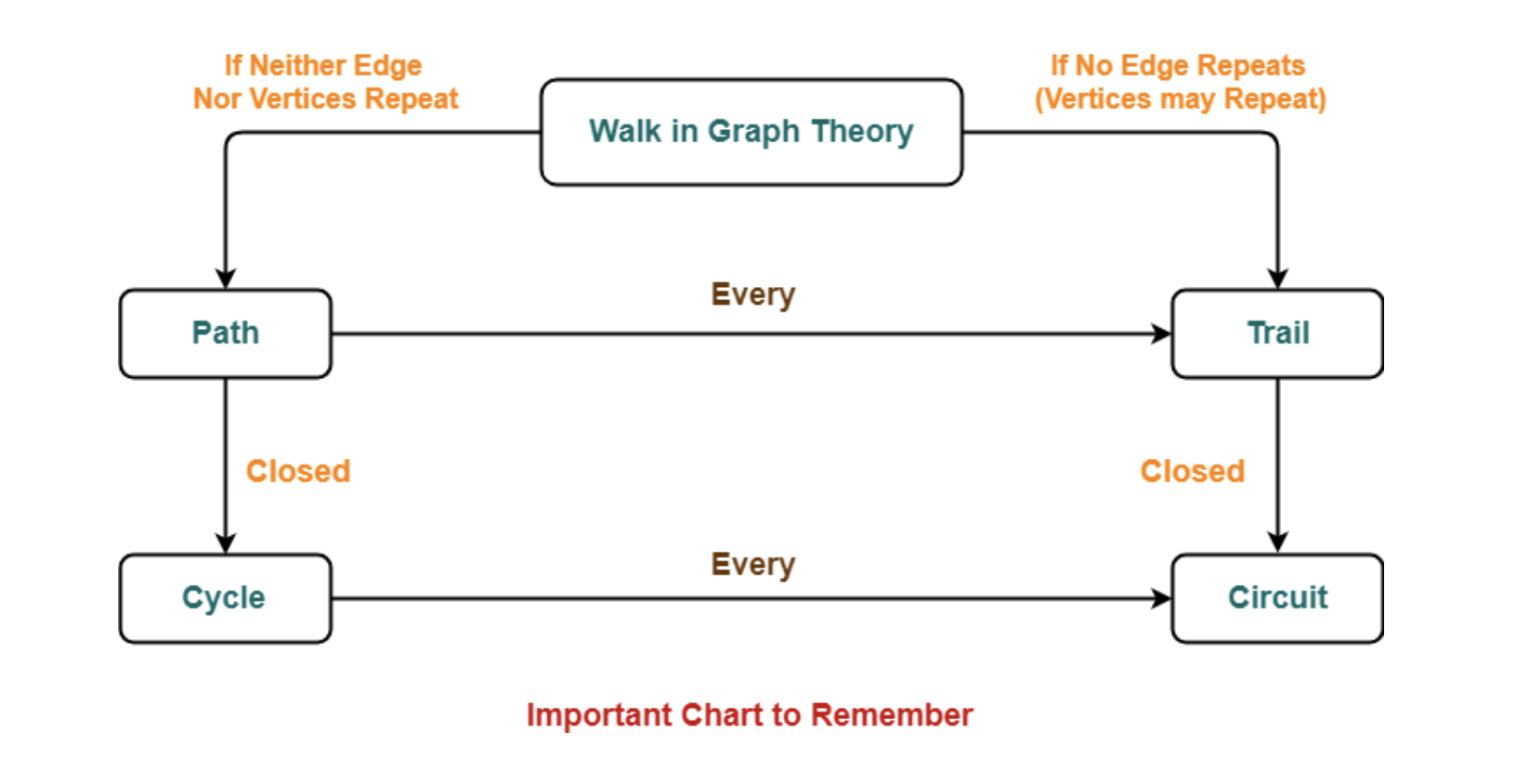

A21 15 0.75Dyadic

- Walk: a sequence of nodes, where each node is adjacent to the next one.

- The length of a walk is the number of edges in it.

- A walk is closed if its first and last vertices coincide.

- Path: a walk that does not repeat nodes or edges.

Finding properties about these aspects is a really important aspect of characterizing a network.

- Trail: an open walk in which no edge is repeated, but a vertex can be repeated.

- Circuit: is a closed trail in which there are no repeated edges, but we can have repeated nodes, other than the first vertex which should be the same as the last.

- Cycle: is a closed path with at least three edges, that is there are no repeated edges, in which the first and last nodes are the same, but otherwise all nodes are distinct.

- Eulerian path: it is a path that traverses each edge in a network exactly once. If, the starting and ending vertices are the same it is called an Euler circuit (or Euler tour).

- Hamiltonian path: it is a path that visits each vertex exactly once. Hamilton cycles are Hamilton paths that start and stop at the same vertex.

In contrast to Euler tours, there is no known efficient procedure by which one can determine whether a graph is Hamiltonian or not - that makes the issue of (non)Hamiltonian graphs difficult.

Counting paths between nodes

One of the most important characteristic of a network are its paths; we can use the adjacency matrix to compute the number of paths leading from one node to the other. We need to raise it to the power of (length of the path): the number of different paths of length from to (vertices) equals the th entry of , where is a positive integer.

Moreover: if

- there are no walks of length going from to .

- is exactly the number of such walks.

Geodesic Paths

- Geodesic path: a path that is a sequence of vertices connected by edges between vertices and with the fewest possible edges. It is the path between two vertices such that no shorter path exists. A geodesic path is also called a shortest path or a graph geodesic.

There is no geodesic path between two vertices if the vertices are not connected by any route through the network; if so, the geodesic distance is infinite () by convention. Geodesic paths are not necessarily unique as it is possible to have two or more paths of equal length between a given pair of vertices.

- Geodesic distance, also called shortest distance, is the length of the geodesic path. It is the shortest network distance between the vertices in question. In other words, the distance between two vertices in a graph is the number of edges in the shortest path connecting them.

An important measure is the average geodesic distance computed among all the nodes: it can tell you how fast is the flow of information between nodes.

- Average Shortest Path Length: the average number of steps along the shortest paths for all possible pairs of network nodes. It is a measure of the efficiency of information or mass transport on a network.

- Eccentricity: the eccentricity of a vertex is its shortest path distance from the farthest node in the graph.

It tells you the maximum distance that might separate two nodes and tells the average maximum distance that information have to travel to reach the extreme areas of a network.

- Radius: the graph radius is the minimum among all the maximum distances between a vertex to all other vertices. A radius of the graph exists only if it has the diameter.

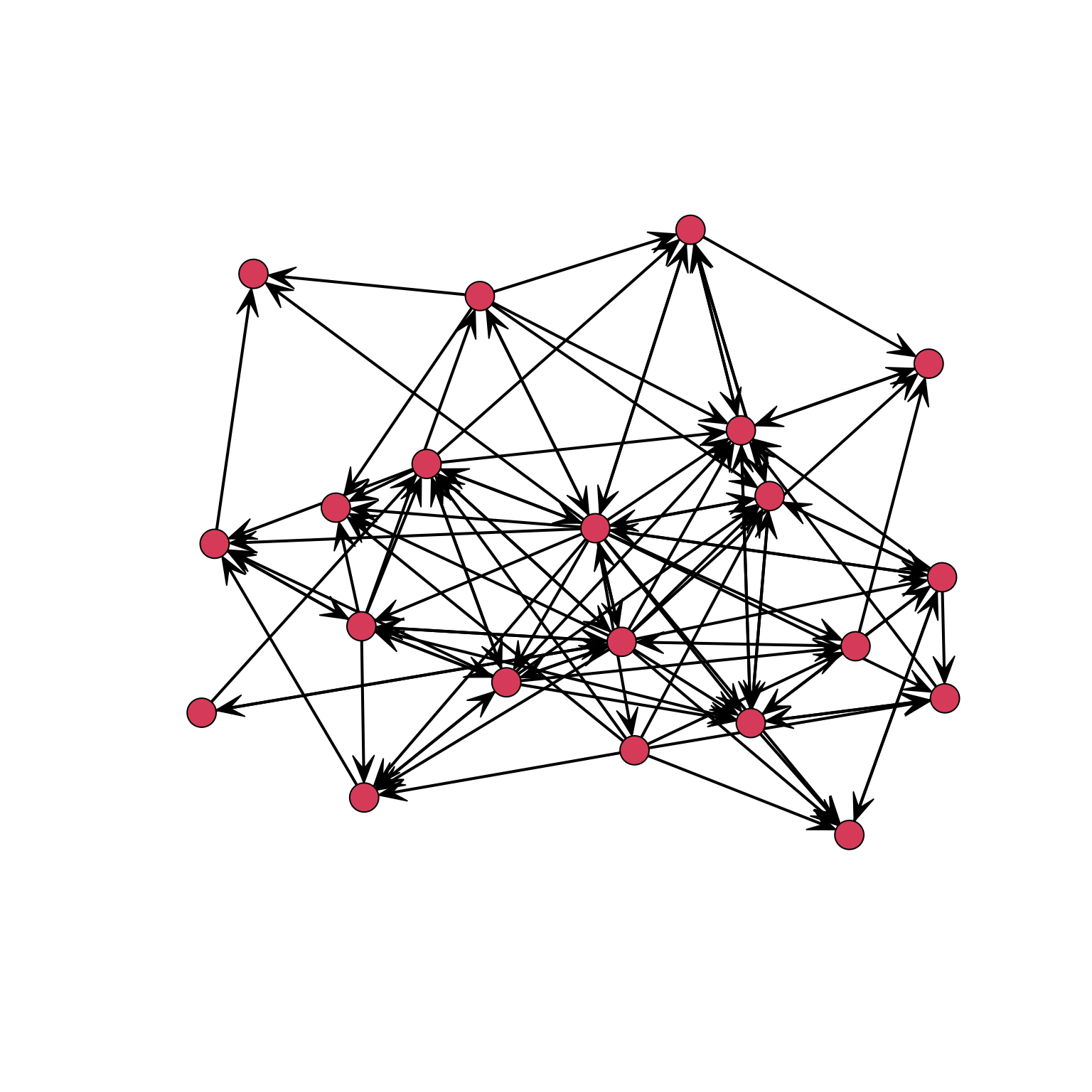

Distance in Digraphs

In directed graphs each path needs to follow the direction of the arrows. In a digraph the distance from node A to B (on an AB path) is generally different from the distance from node B to A (on a BCA path).

How to find the shortest path?

Graph geodesics may be found using a Breadth-first search or using Dijkstra’s algorithm.

The average shortest path can work as a proxy for the speed at which information travels between nodes and along the network. When we are dealing with bigger graphs, we need methods to solve the problem in an algorithmic view, since visual analysis is not feasible.

Breadth-first search: you start from the node of interest and start exploring the network, moving one node at a time and exploring all nodes in the network.

Dijkstra’s algorithm:

- Mark the ending vertex with a distance of zero. Designate this vertex as current and record the distance in

[]after the vertex name. - Find all vertices leading to the current vertex. Calculate their distances to the end. Since we already know the distance the current vertex, mark the distance to the end (now, current vertex).

- Note that once you visited a node, you do not need to visit it again.

- Move to the vertex with the shortest distance; set is as the starting point, then add its distance to its neighbours.

- Beware that travelling to the same node from different starting point might yield different distances.

- The shortest path will be the one that generated the minimum distance.

- Mark the ending vertex with a distance of zero. Designate this vertex as current and record the distance in

Some remarks about Dijkstra’s algorithm:

- It is an optimal algorithm, meaning that it always produces the actual shortest path.

- It is efficient, meaning that it can be implemented in a reasonable amount of time; in this case, , or the number of nodes.

- It takes around calculations, where is the number of vertices in a graph.

This algorithm is the default choice in R when computing the shortest distance. It is also used to compute the average shortest path.

It is possible that there is no path at all between a given pair of vertices in a network: these are called disconnected networks. The implication is that some areas are not connected to the rest of the network. This property can change over time.

Connected components

The adjacency matrix of a network with several components can be written in a block-diagonal form: all non-zero element are confined in sub-matrices (squares), with all other elements being zero.

The vast majority of real networks host most of their nodes in a single connected component, also known as GCC (Giant Connected Component).

Laplacian Matrix

Or, equivalently, the matrix:

Connectivity

A directed graph is strongly connected if there is a path from a to b and from b to a whenever both are vertices in a graph. A directed graph is weakly connected if there is a path between any two vertices in the underlying undirected graph.

All this indicators and measures will be used to study the statistical properties of networks at scale.

Triadic characteristics

Local clustering: clustering associated with a given individual.

In other words, we are counting and computing all close triads of elements all connected to each other and dividing it by all the connections of a given node (open triads). It is a normalized measure.

The average clustering of a network can also be computed.

Triadic closure

The triadic closure is the tendency, especially in social system, to close triangles. The tendency to connect is stronger among three nodes, if two of them are already connected.

This implies that real social networks tend to have a large number of closed triangles and thus a high average clustering; such effect might be caused by:

- Trust-transitivity.

- Enhanced opportunities

- Decreasing interaction costs.

Triadic balance

A key dynamic process in signed networks: closed triads tend to be stable if they involve an odd number of positive relations. Unbalanced triads tend to evolve to a balanced one, by changing one interaction signs or opening the triad.

It is a very powerful dynamic which shapes the topology of networks.

Assortativity

It measures the tendency of nodes to be connected with other nodes with similar degree.

- Positive assortativity: high degree nodes tend to be connected with high degree nodes (and low degree nodes with low degree nodes).

- Negative assortativity (dissortativity): high degree nodes tend to be connected with low degree nodes.

Social networks are typically assortative, which technological networks dissortative.

It can be quantified using the Pearson coefficient (correlation) of the degrees of adjacent nodes.

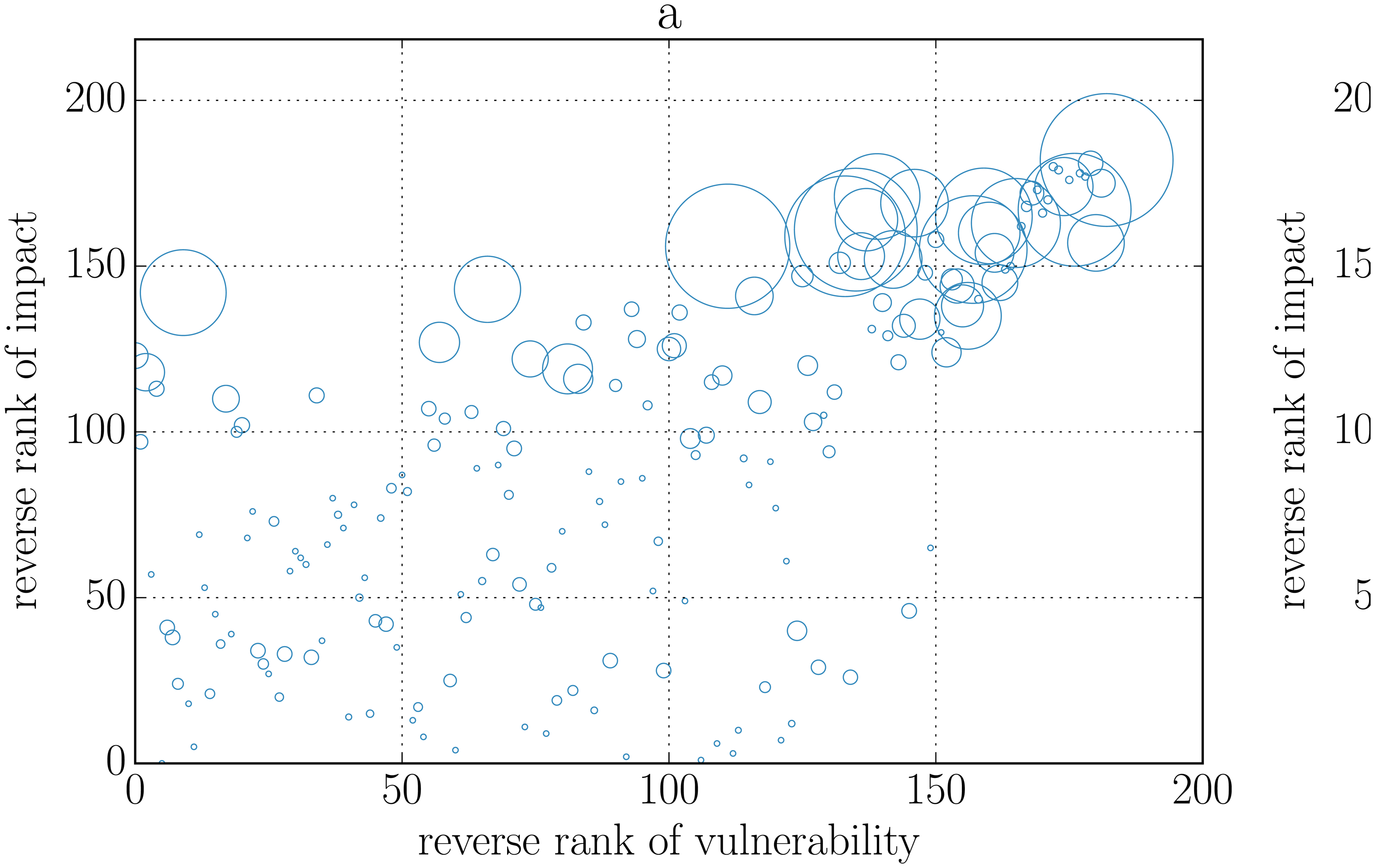

The assortativity can be computed only for nodes with a given degree and it is possible to visualize the general tendency of your network in the slope of the following function, which looks at the average nearest neighbour degree of nodes with given degree:

This is equivalent to , which is the conditional probability that an edge of node with degree points to a node with degree .

Computing this variables and statistical measures for different networks allow to create classifications and opens the way for more analysis.

Why are these measures important?

- Average Shortest Path:

- It is a good indication of how fast information, diseases, goods, et. spread in the network.

- Clustering:

- Connected triads are important in social networks.

- In social networks clustering tends to be high, due to triadic closure

- “If A is connected with B and with C it is very likely that B and C are themselves connected with each other”.

- Important social phenomenon happen on triads, e.g. triadic balance:

- “The friend of my friend is my friend”, “The enemy of my enemy is my friend”, “The friend of my enemy is my enemy”

- A triad is balanced if the number of +s is odd.

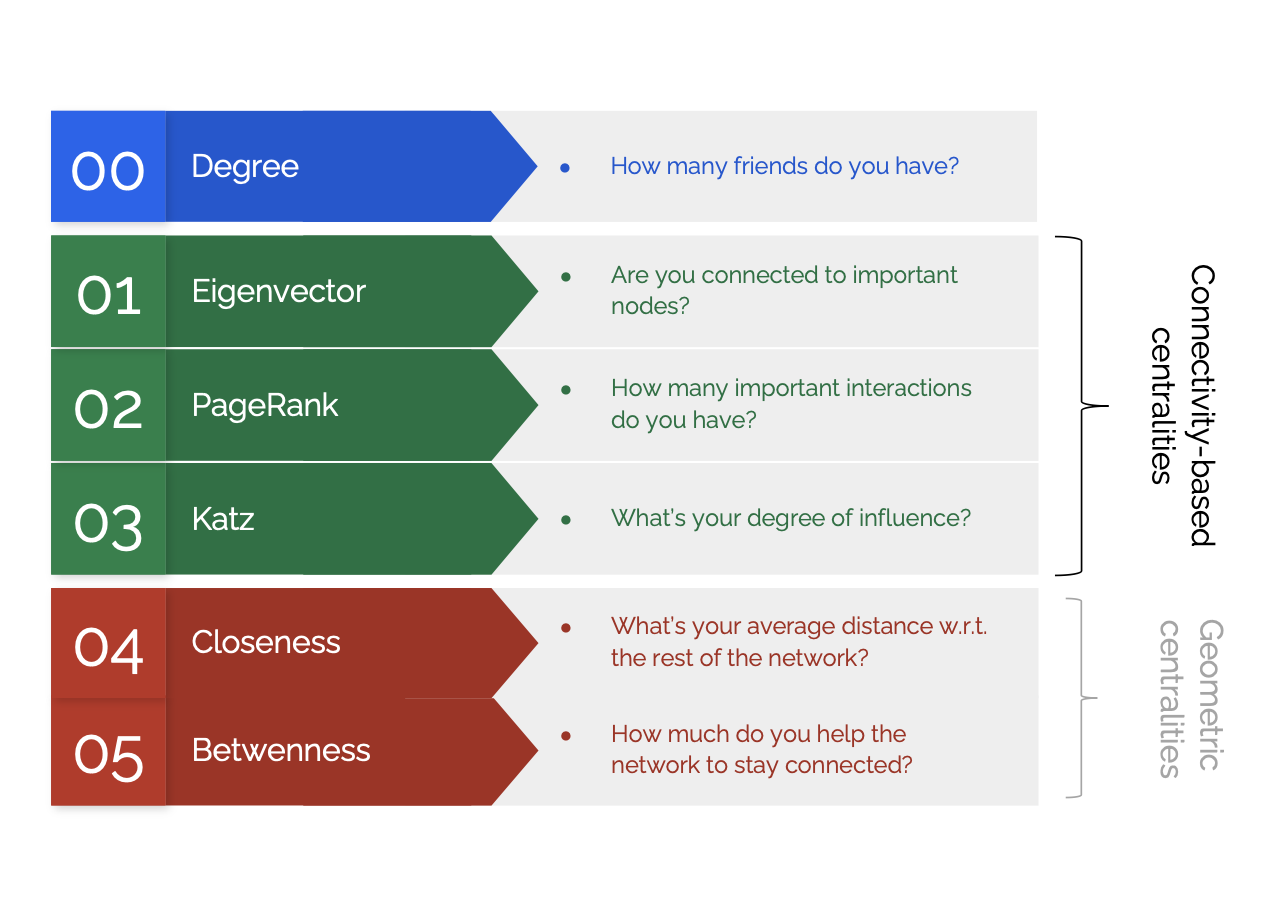

Centrality

We measure nodes importance using so-called centrality: it is a proxy to capture which are the most important node. It has nothing to do with position in a network. There are different possible definitions, each measure varying by context and purpose. It is a local measure, relative to the rest of the network: it is not expressing a global property.

- Metric for network analysis.

- Element-based.

- It can be used to rank individuals in a network, inducing a centrality ranking on the nodes not based on the nature of the node.

- Topological measure that scores nodes by their importance as a part of the big network.

Centrality measures are widely used:

- You can target central individuals with marketing or other preventive measures.

- You can attack/defend differently central nodes (e.g. strategical infrastructures).

- Epidemiology control (e.g., target prostitutes with s.t.d. prevention campaigns).

- Power in exchange networks.

The key question is:

Who’s important, based on their network position?

This depends on the context and purpose of the measure.

In each of the following networks, as higher centrality than according to a particular measure. In other words, position (e.g. choke points) in a network matters when considering centrality.

It is always possible, once fixed a contest, to measure or distance from a focal node (a node which is particularly important). An example of this is the Erdös number.

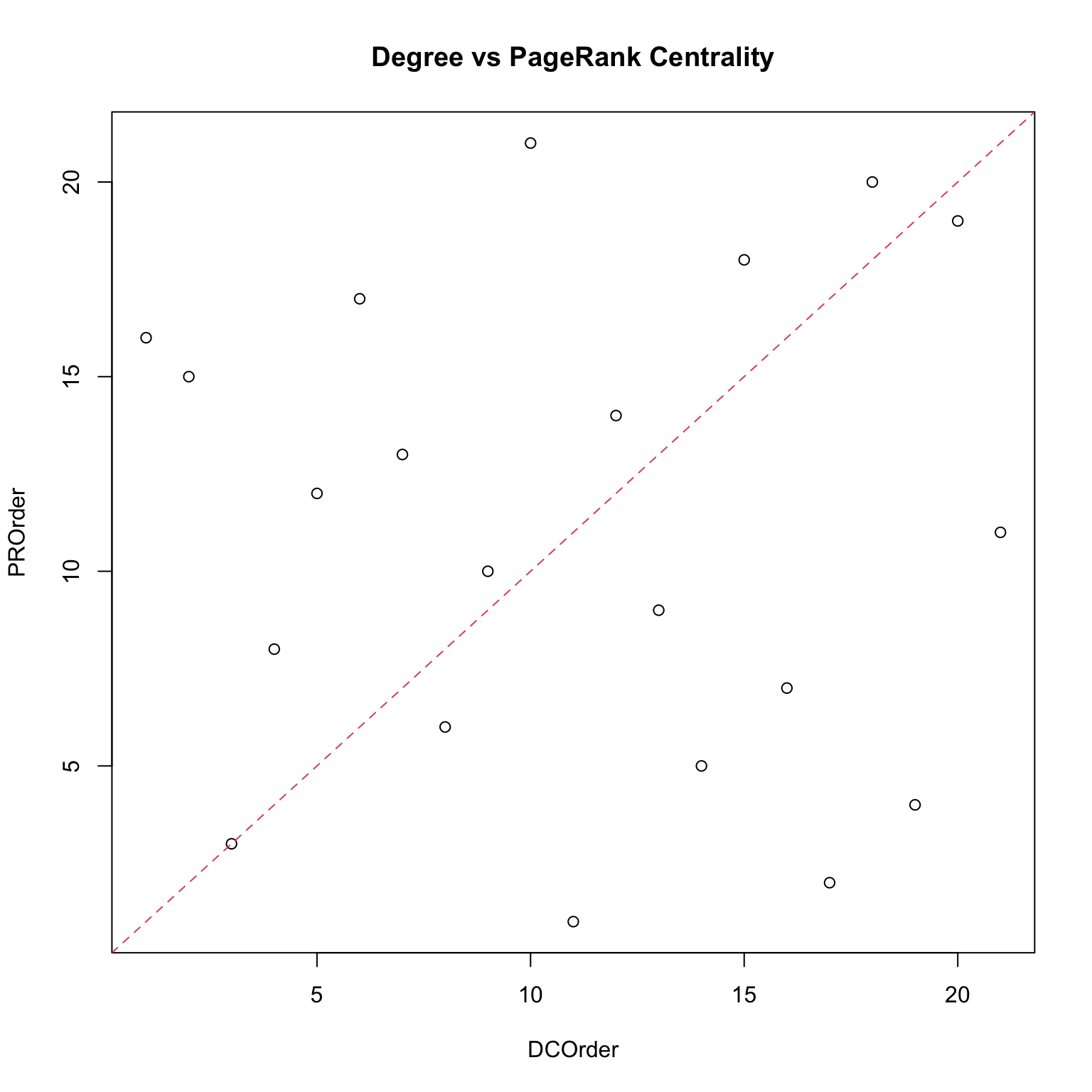

Degree centrality

Node degree is one of the basic centrality measures: it is equal to the number of node neighbours.

A high degree centrality value of a given node implies that the node has many neighbours:

- It is then closely related to its neighbours (in weighted similarity graphs).

- However, this measure does not capture cascade effects, such as “I am more important if my neighbours are important”.

How many neighbours does a node have? Often it is enough to find important nodes, but not always.

- Twitter users could have most contacts which are spam-bots.

- The webpages/Wikipedia pages with most links are often lists of references.

In other words, the centrality is relative to the task and measure you want to obtain.

Moreover, it is just a local measure of the importance of a node within a graph: node degree is local, not global and therefore it does not show us the global picture.

Normalize degree centrality

The normalized degree centrality is the degree divided by the maximum possible degree, expressed as a percentage. The normalized values should only be used for binary data.

The degree centrality thesis reads as follows:

- A node is important if it has many neighbours, or, in the directed case, if there are many other nodes that link to it, or if it links to many other nodes.

- If the network is directed, we have two versions of the measure:

- In-degree is the number of in-coming links, or the number of predecessor nodes.

- Out-degree is the number of out-going links, or the number of successor nodes.

Typically, we are interested in in-degree, since in-links are given by other nodes in the network, while out-links are determined by the node itself.

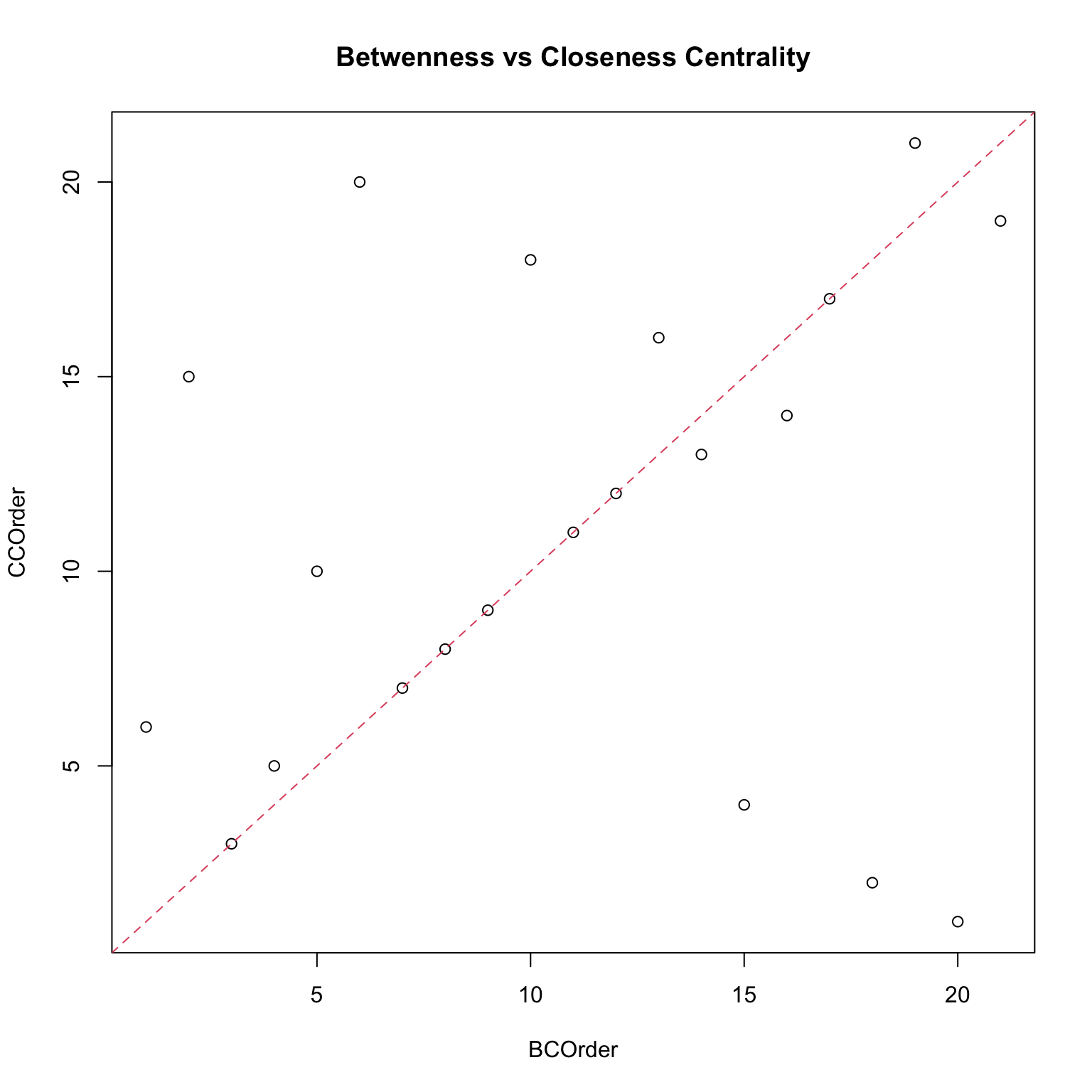

Closeness centrality

Since in each network is improbable to be connected to every other peer, we need the concept of closeness centrality, which measures the topology of a node and its position relative to other nodes and the center of the network. In other words: one wants to be in the “middle” of things, not too far from the center. A shop, for example, might wish to be positioned close to every destination as it makes it easy to reach from its customers.

For this use case, closeness centrality would be a good metric to choose your location since it measures the average path length. We want to understand which nodes have an easier life in sending information to other nodes.

To compute it:

- Calculate all shortest paths starting from that node to every possible destination in the network.

- Sum these distances to obtain the total distance measure.

- Take the average of this value by dividing it by the number of all possible destinations, .

- Take the opposite (inverse) of what we have just computed.

The closeness centrality of is nothing more than its inverse average path length.

- Farness: average of length of shortest paths to all other nodes.

- Closeness: inverse of the Farness (normalized by the number of nodes).

The mathematical definition is:

The higher your closeness centrality value, the more your node is close to other nodes in your network: the highest value is 1. Two limitations however are associated with this measure:

- It spans a very small dynamic range: in most networks, the range (max - min) is small.

- In disconnected graph, centrality is zero for all nodes.

Betweenness centrality

We want to use the whole topology to define the centrality of a node: it is one of the most important measures. Betweenness counts paths: we still calculate all shortest paths between al possible pairs of origins and destinations, although we count the number of paths passing through of which is neither an origin nor a destination. Such a measure then works as a proxy of the relevance of a node in a network connections and its relevance in the spread of information.

The main assumption is that important vertices are bridges over which information flows. If information spreads via shortest paths, important nodes are found on many shortest paths.

We wish to measure the percentage of shortest path where our node lies in them. A node with high betweenness centrality may have considerable influence over the information passing between other nodes: it is a much better measure of influence over the whole network, not only at a local level.

Nodes with a high BC are also called network broker: the network structure is dependent on its presence and so is the flow of information.

How to compute: it is the proportion of shortest paths between all other pairs of nodes that the given node lies on.

However, the shortest path is not necessarily unique.

If there are alternative paths not passing through the node, then the path contributes only to the node’s centrality.

Another issue regards hubs: in the context of a network, a hub is a node with a large degree, meaning it has connections with many other nodes. However, even though they might have a high BC, this is not necessarily implying that they work as brokers.

How many paths would become longer if node would disappear from the network? How much is the network structure dependent on s presence?

Since real world networks have hubs which are closer to most nodes, the shortest paths will use them often.

- As a result, betweenness centrality distributes over many orders of magnitude, just like the degree.

- Unlike the degree, it considers more complex information than simply the number of connections.

The betweenness centrality of a node scales with the number of pairs of nodes in the network: we can find different measures, such as standardized, normalized, scaled betweenness centrality.

Scaled formula:

Its values are typically distributed over a wide range: its maximum value occurs when the vertex lies on the shortest path between every other pair of vertices, such as in stars networks.

If the graph is not connected, it is by convention set to 0 for unreachable pairs. Betweenness centrality shares with closeness a drawback: computational complexity, which means that for large structure it is not possible to compute.

Flow betweenness centrality

Used generally for information flow.

Betweenness only uses geodesic paths: yet, information can also flow on longer paths. While betweenness focuses just on the geodesic, flow betweenness centrality focuses on how information might flow through many different paths. The flow betweenness is a measure of the contribution of a vertex to all possible maximum flows.

The flow approach to centrality assumes that actors will use all the pathways that connect them. For each actor, the measure reflects the number of times the actor is in a flow (any flow) between all other pairs of actors (generally, as a ratio of the total flow betweenness that does not involve the actor).

Edge betweenness centrality

In addition to calculating betweenness measures for actors, we can also calculate betweenness measures for edges.

Edge betweenness is the degree to which an edge makes other connections possible.

Centralities: a comparison

Which centrality measure is really useful? It depends on the objective, which is what we want to measure.

- In a friendship network, degree centrality would correspond to who is the most popular kid. This might be important for certain questions.

- Closeness centrality would correspond to who is closest to the rest of the group, so this would be relevant if we wanted to understand who to inform or influence for information to spread to the rest of the network.

- Betweenness would be relevant if the thought experiment was which individuals would have to be taken out of the network in order to break the network into separate clusters.

Note that none of them is a wrong measure, they just reveal different features: this means that it is usually a good practice to compute a set of them. Moreover, each centrality measure is a proxy of an underlying network process. If such a process is irrelevant for the network then the centrality measure makes no sense.

They are best used to explore a network.

Connectivity based centralities

Eigenvectors centrality

An eigenvector is a vector whose direction remains unchanged when a linear transformation is applied to it.

Are you connected to important nodes?

- Having more friends does not by itself guarantee that someone is more important.

- Having more important friends provides a stronger signal.

This kind of connections also considers the importance of other nodes. In a network, this however implies a recursive problem.

Relationships with more important nodes confer more importance than relationships with less important nodes.

The eigenvector centrality is computed by calculating an eigenvector on the adjacency matrix: it is a generalization of the degree centrality and characterizes the global prominence of a vertex in a graph. The main idea is to compute the centrality of a node as a function of the centralities of its neighbours: the centrality of a node is proportional to the sum of scores of its neighbours.

The eigenvector centrality of each node is given by the entries of the leading eigenvector (the one corresponding to the largest eigenvalue). It is extremely useful, one of the most common ones used for non-directed networks: however, it does not work in acyclic (directed) networks, unless we are in a network in which every node can be reached: we need only one weakly connected component.

Katz centrality

A major problem with eigenvector centrality arises when it deals with directed graphs: each of the nodes pass some of its importance to its peers; in directed networks, some node will only be able to send out importance and not to receive it.

EC works well only if the graph is (strongly) connected.

Katz centrality works around this problem by giving a small amount of centrality for free, the minimum of centrality a node can transfer to other nodes by referring to them.

- Degree centrality of node measures the local influence of a node.

- Eigenvector centrality measures the global influence of a node within the network.

- Katz centrality covers both the local and global influence of .

A node is important if it is linked from other important nodes or if it is highly linked.

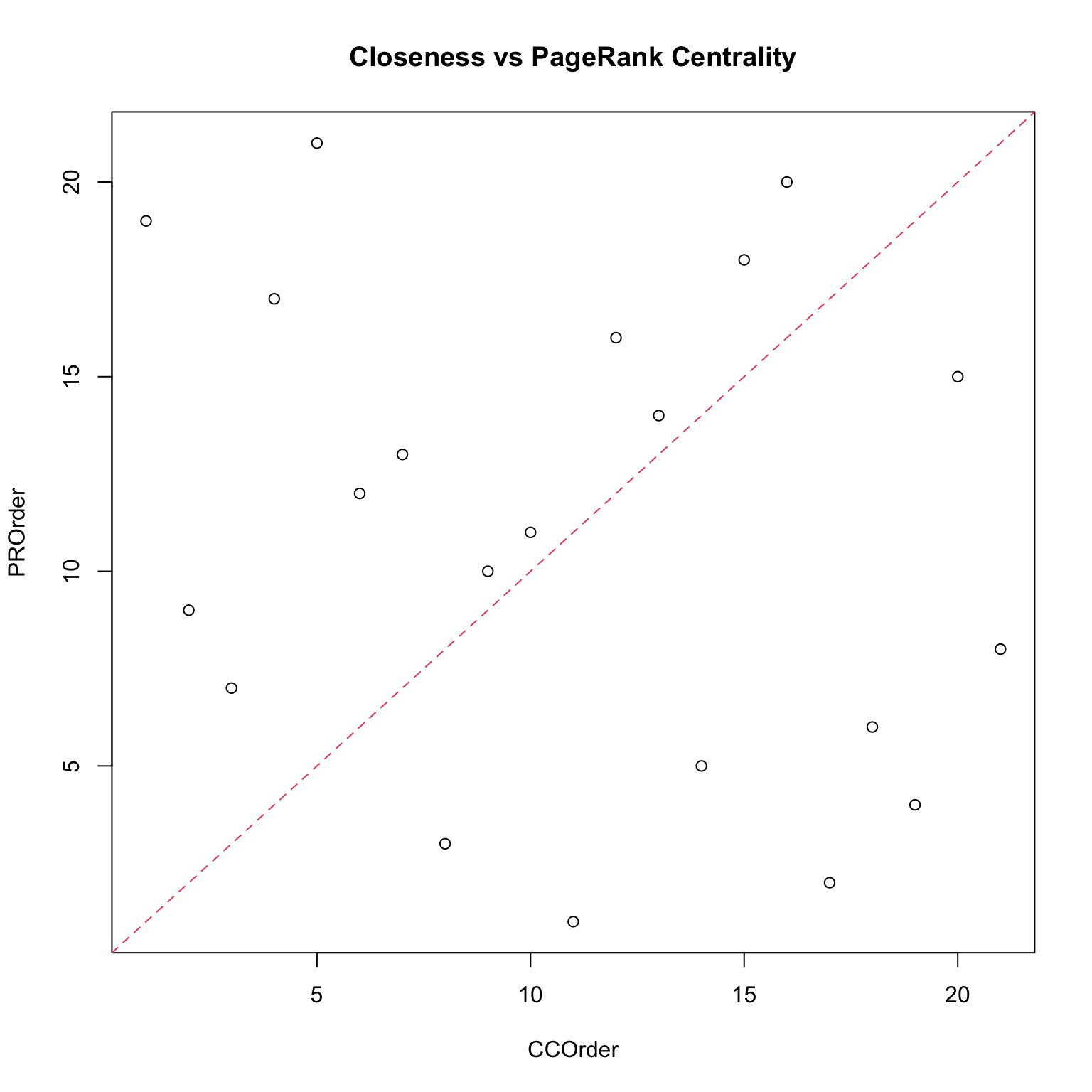

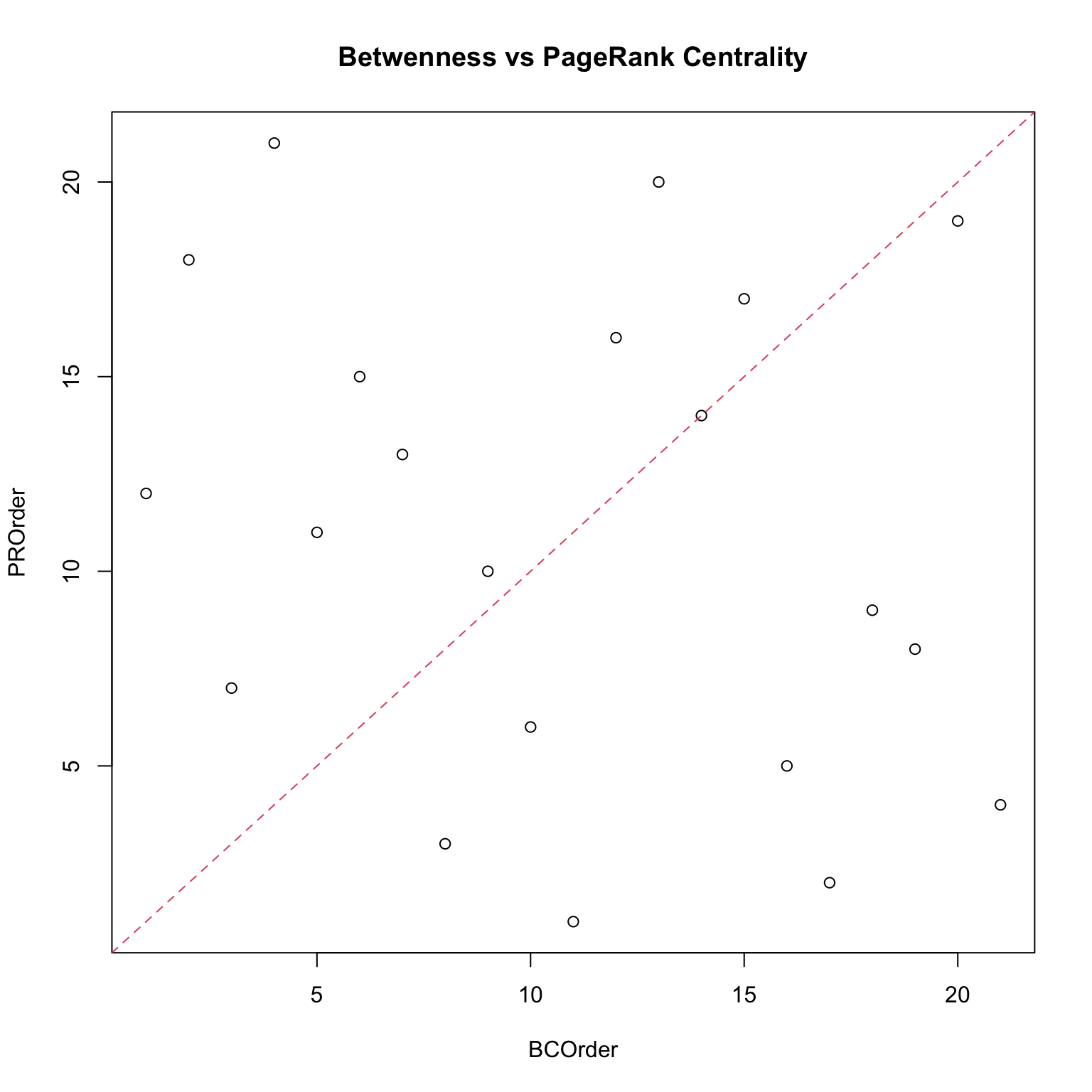

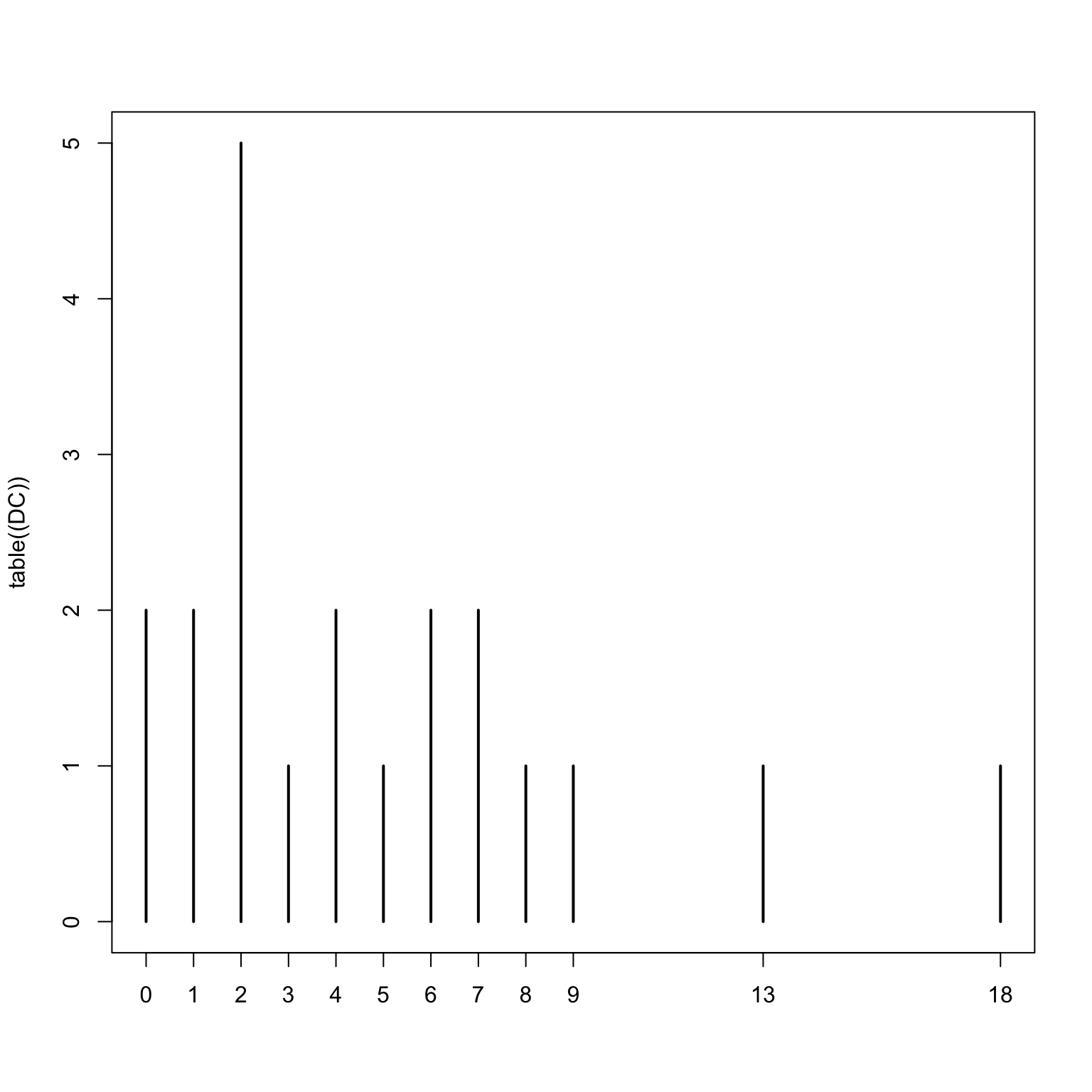

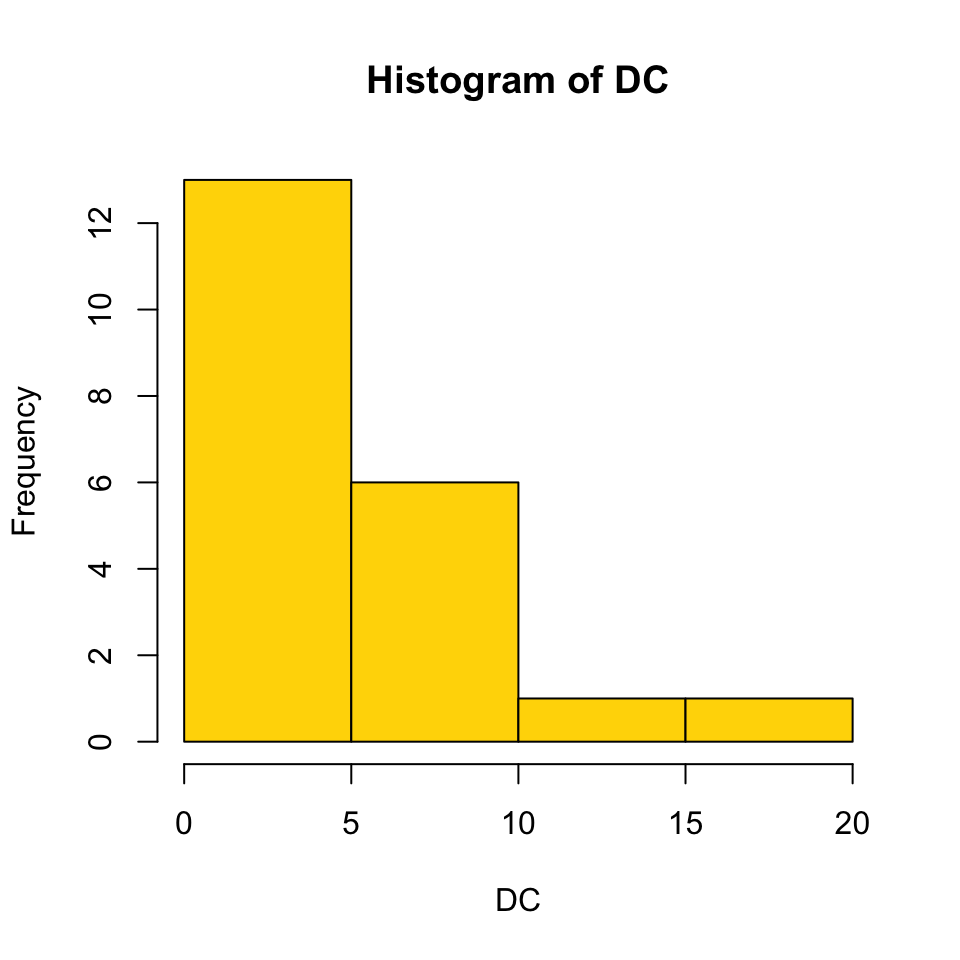

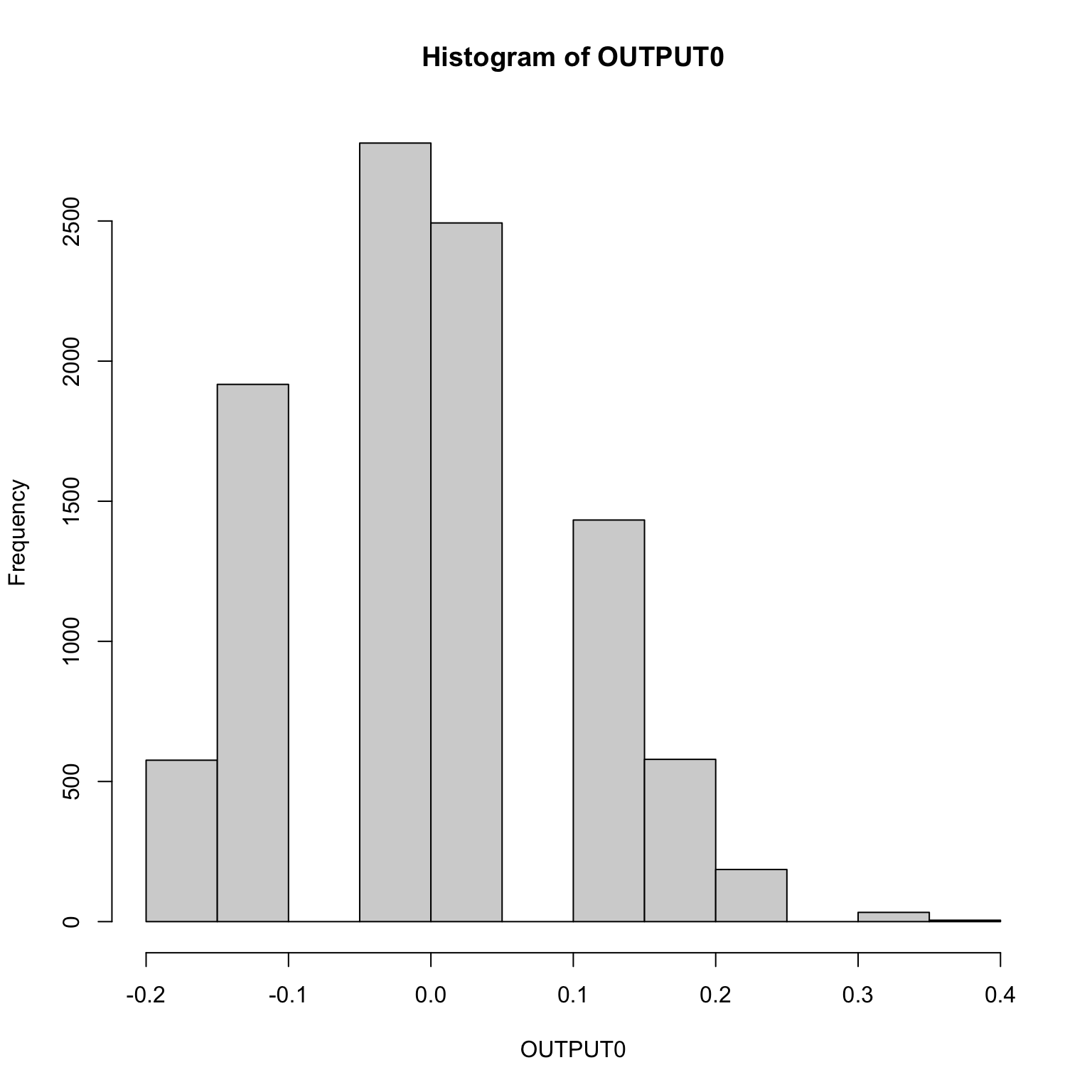

No vertex has zero centrality and will contribute at least to other vertices centrality: the main issue is that (dampening factor) and should be chosen before the computation: